In Depth MIDI File Operations in C#

Updated on 2020-03-26

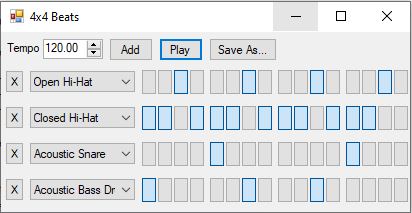

An in-depth guide to advanced MIDI operations. Includes a demo drum step sequencer and a file slicer.

Introduction

This article aims to teach you, gentle reader, how to use my MIDI library, named simply "Midi", to generate and modify MIDI sequences. It also aims to explain the core of the MIDI protocol and file format so that you fully understand what's going on. This library lets you get low level with the structure of MIDI streams, and you often deal with messages directly, so it helps to understand the protocol before using it. There are other offerings out there that do higher level MIDI manipulation, but they don't typically give you raw access like this library does.

Update: Doubled length of sequencer. Added some comments, and minor improvements

Update 2: Added Bars option to FourByFour

Conceptualizing this Mess

MIDI stands for Musical Instrument Digital Interface. It is an 8-bit big-endian wire protocol that was developed in the 1980s to supplant the more traditional analog ways of controlling things like synthesizers, which previously used "voltage controlled" parameters that could be changed by things like foot pedal controllers. It also added tons of extensible controller ids and various other features to greatly expand the musician's capacity to control digital instruments like synthesizers, MIDI capable pianos, and drum machines. These instruments can play back musical scores sequenced by the artist, whether recorded in real time using a MIDI capable controller device like an electronic keyboard, played back from a file, or even generated through some other means.

Essentially, MIDI seeks to allow a musician to control every aspect of a performance, including controlling multiple instruments, adjusting things like pan and vibrato, and precisely playing notes at the velocity and time the musician desires. It's also a producer's best friend, as it can automate things like mixer boards. Some MIDI applications even control lighting at shows along with the musical performance and mixing data!

The protocol is extensible enough that it was only recently updated to a 2.0 specification after almost 35 years of MIDI 1.0. Since virtually all instruments support MIDI 1.0 and almost none support 2.0, this library works with 1.0 only.

The MIDI Wire-Protocol

MIDI works using "messages" which tell an instrument what to do. MIDI messages are divided into two types: channel messages and system messages. Channel messages make up the bulk of the data stream and carry performance information, while system messages control global/ambient settings.

A channel message is called a channel message because it is targeted to a particular channel. Each channel can control its own instrument and up to 16 channels are available. By General MIDI convention, channel #10 (zero based index 9) is a special channel that carries percussion information, while the other channels are mapped to arbitrary devices. This means the MIDI protocol is capable of communicating with up to 16 individual devices at once.

A system message is called a system message because it controls global/ambient settings that apply to all channels. One example is sending proprietary information to a particular piece of hardware, which is done through a "system exclusive" or "sysex" message. Another example is the special information included in MIDI files (but not present in the wire protocol), such as the tempo to play the file back at. A third example is a "system realtime message", which allows access to the transport features (play, stop, continue, and setting the timing for transport devices).

Each MIDI message has a "status byte" associated with it. This is usually** the first byte in a MIDI message. For channel messages, the status byte contains the message id in the high nibble (4 bits) and the target channel in the low nibble. Ergo, the status byte 0xC5 indicates a channel message type of 0xC and a target channel of 0x5. The high nibble must be 0x8 or greater because a set high bit is what marks a byte as a status byte; data bytes are always 0x7F or less. If the high nibble is 0xF, this is a system message, and the entire status byte is the message id since there is no channel. For example, 0xFF is the message id for a MIDI "meta event" message that can be found in MIDI files. In other words, when the high nibble is 0xF, the low nibble is part of the message id rather than a channel.

** Due to an optimization in the protocol, known as running status, the status byte may be omitted, in which case the status byte from the previous message is used. This allows "runs" of messages with the same status but different parameters to be sent without repeating the redundant byte for each message.
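To make the nibble arithmetic concrete, here's a minimal sketch in plain C#. It doesn't use the Midi library at all; the StatusByte class and its method names are made up purely for illustration.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class StatusByte
{
    // True when the top bit is set, i.e. the byte is a status byte rather than data.
    public static bool IsStatus(byte b) => (b & 0x80) != 0;

    // True for system messages (0xF0-0xFF), which carry no channel.
    public static bool IsSystem(byte status) => (status & 0xF0) == 0xF0;

    // Message id: the high nibble for channel messages, the whole byte for system messages.
    public static int MessageId(byte status) => IsSystem(status) ? status : status >> 4;

    // Target channel (0-15) for channel messages, or -1 for system messages.
    public static int Channel(byte status) => IsSystem(status) ? -1 : status & 0x0F;
}
```

With this, StatusByte.MessageId(0xC5) yields 0xC and StatusByte.Channel(0xC5) yields 5, while 0xFF is reported as a system message with no channel.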

The following channel messages are available:

- 0x8 Note Off - Releases the specified note. All notes with the specified note id are released, so if there are two Note Ons followed by one Note Off for C#4, all of the C#4 notes on that channel are released. This message is 3 bytes in length, including the status byte. The 2nd byte is the note id (0-0x7F/127), and the 3rd is the velocity (0-0x7F/127). The velocity is included in this message but virtually never respected. I'm not sure why it exists. Nothing I've ever encountered uses it. It's usually set to zero, or perhaps to the velocity of the corresponding note on. It really doesn't matter.

- 0x9 Note On - Strikes and holds the specified note until a corresponding note off message is found. This message is 3 bytes in length, including the status byte. The parameters are the same as note off.

- 0xA Key Pressure/Aftertouch - Indicates the pressure that the key is being held down at. This is usually for higher end keyboards that support it, to give an after effect when a note is held depending on the pressure it is held at. This message is 3 bytes in length, including the status byte. The 2nd byte is the note id (0-0x7F/127) while the 3rd is the pressure (0-0x7F/127).

- 0xB Control Change - Indicates that a controller value is to be changed to the specified value. Controllers are different for different instruments, but there are standard control codes for common controls like panning. This message is 3 bytes in length, including the status byte. The 2nd byte is the control id. There are common ids like panning (0x0A/10) and volume (7), and many that are just custom, often hardware specific or customizably mapped in your hardware to different parameters. There's a table of standard and available custom codes here. The 3rd byte is the value (0-0x7F/127) whose meaning depends heavily on what the 2nd byte is.

- 0xC Patch/Program Change - Some devices have multiple different "programs" or settings that produce different sounds. For example, your synthesizer may have a program to emulate an electric piano and one to emulate a string ensemble. This message allows you to set which sound is to be played by the device. This message is 2 bytes long, including the status byte. The 2nd byte is the patch/program id (0-0x7F/127).

- 0xD Channel Pressure/Non-Polyphonic Aftertouch - This is similar to the aftertouch message, but is geared for less sophisticated instruments that don't support polyphonic aftertouch. It affects the entire channel instead of an individual key, so it affects all playing notes. It is specified as the single greatest aftertouch value for all depressed keys. This message is 2 bytes long, including the status byte. The 2nd byte is the pressure (0-0x7F/127).

- 0xE Pitch Wheel Change - This indicates that the pitch wheel has moved to a new position. This generally applies an overall pitch modifier to all notes in the channel such that as the wheel is moved upward, the pitch for all playing notes is increased accordingly, and the opposite goes for moving the wheel downward. This message is 3 bytes long, including the status byte. The 2nd and 3rd bytes contain the least significant 7 bits (0-0x7F/127) and the most significant 7 bits respectively, yielding a 14-bit value.
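The message lengths and the 14-bit pitch wheel value described above can be sketched in a few lines. This is plain C# that stands apart from the Midi library; ChannelMessages, DataLength, and PitchWheelValue are hypothetical names chosen for this example.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class ChannelMessages
{
    // Number of data bytes that follow the status byte of a channel message.
    public static int DataLength(byte status)
    {
        switch (status & 0xF0)
        {
            case 0xC0: // patch/program change
            case 0xD0: // channel pressure
                return 1;
            case 0x80: // note off
            case 0x90: // note on
            case 0xA0: // key pressure
            case 0xB0: // control change
            case 0xE0: // pitch wheel change
                return 2;
            default:
                throw new ArgumentException("Not a channel message status byte.", nameof(status));
        }
    }

    // Combines the two 7-bit data bytes of a pitch wheel message
    // (least significant first on the wire) into one 14-bit value.
    public static int PitchWheelValue(byte lsb, byte msb) => ((msb & 0x7F) << 7) | (lsb & 0x7F);
}
```

The resulting pitch wheel value ranges from 0 to 0x3FFF (16383).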

The following system messages are available (non-exhaustive):

- 0xF0 System Exclusive - This indicates a device specific data stream is to be sent to the MIDI output port. The length of the message varies and is bookended by the End of System Exclusive message. I'm not clear on how this is transmitted just yet, but it's different in the file format than it is over the wire, which makes it a one-off. In the file, the length immediately follows the status byte and is encoded as a "variable length quantity", which is covered in a bit. Finally, the data of the specified byte length follows that.

- 0xF7 End of System Exclusive - This indicates an end marker for a system exclusive message stream.

- 0xFF Meta Message - This is defined in MIDI files, but not in the wire-protocol. It indicates special data specific to files such as the tempo the file should be played at, plus additional information about the scores, like the name of the sequence, the names of the individual tracks, copyright notices, and even lyrics. These may be of arbitrary length. What follows the status byte is a byte indicating the "type" of the meta message, and then a "variable length quantity" that indicates the length, once again followed by the data.

Here's a sample of what messages look like over the wire.

Note on, middle C, maximum velocity on channel 0:

90 3C 7F

Patch change to 1 on channel 2:

C2 01

Remember, the status byte can be omitted. Here are some note on messages to channel 0 in a run:

90 3C 7F 40 7F 43 7F

That yields a C major chord at middle C. Each of the two messages with the status byte omitted uses the previous status byte, 0x90.
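Here's a rough sketch of how a receiver might expand a run like this back into complete messages. It's plain C#, independent of the Midi library, and handles channel messages only; the RunningStatus class and its Expand method are names invented for this example.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class RunningStatus
{
    // Splits a buffer of channel messages into complete messages,
    // re-inserting the status byte wherever running status omitted it.
    public static List<byte[]> Expand(byte[] wire)
    {
        var result = new List<byte[]>();
        byte status = 0; // 0 means "no status byte seen yet"
        int i = 0;
        while (i < wire.Length)
        {
            // a byte with the high bit set is a new status byte: remember it
            if ((wire[i] & 0x80) != 0)
                status = wire[i++];
            if (status == 0 || status >= 0xF0)
                throw new FormatException("Expected a channel message status byte.");
            // patch change (0xC) and channel pressure (0xD) take one data byte,
            // every other channel message takes two
            int hi = status & 0xF0;
            int dataLen = (hi == 0xC0 || hi == 0xD0) ? 1 : 2;
            if (i + dataLen > wire.Length)
                throw new FormatException("Truncated message.");
            var msg = new byte[1 + dataLen];
            msg[0] = status;
            Array.Copy(wire, i, msg, 1, dataLen);
            result.Add(msg);
            i += dataLen;
        }
        return result;
    }
}
```

Feeding it the bytes 90 3C 7F 40 7F 43 7F (note ons for C, E, and G starting at middle C) produces three 3-byte messages, each beginning with 0x90.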

The MIDI File Format

Once you understand the MIDI wire-protocol, the file format is fairly straightforward as about 80% or more of an average MIDI file is simply MIDI messages with a timestamp on them.

MIDI files typically have a ".mid" extension, and like the wire-protocol, they use a big-endian format. A MIDI file is laid out in "chunks." A chunk consists of a FourCC code (simply a 4 byte code in ASCII) which indicates the chunk type, followed by a 4-byte integer value that indicates the length of the chunk, followed by a stream of bytes of the indicated length. The FourCC for the first chunk in the file is always "MThd". The FourCC for the only other relevant chunk type is "MTrk". All other chunk types are proprietary and should be ignored unless they are understood. The chunks are laid out sequentially, back to back in the file.

The first chunk, "MThd", always has its length field set to 6 bytes. The data that follows it is three 2-byte integers. The first indicates the MIDI file type, which is almost always 1, but simple files can be type 0, and there's a specialized type - type 2 - which stores patterns. The second number is the count of "tracks" in the file. A MIDI file can contain more than one track, with each track containing its own score. The third number is the "timebase" of the MIDI file (often 480), which indicates the number of MIDI "ticks" per quarter note. How much time a tick represents depends on the current tempo.
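As a minimal sketch of that layout, here is how the 14 bytes of an "MThd" chunk could be picked apart by hand. This is not how the Midi library reads files (use MidiFile.ReadFrom() for real work); MidiHeader and Parse are hypothetical names, and the sketch assumes the timebase is given in ticks per quarter note.

```csharp
using System;
using System.Text;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class MidiHeader
{
    // Reads a big-endian 16-bit unsigned value.
    static int ReadUInt16BE(byte[] b, int ofs) => (b[ofs] << 8) | b[ofs + 1];

    // Reads a big-endian 32-bit unsigned value.
    static long ReadUInt32BE(byte[] b, int ofs) =>
        ((long)b[ofs] << 24) | ((long)b[ofs + 1] << 16) | ((long)b[ofs + 2] << 8) | b[ofs + 3];

    // Parses the MThd chunk at the start of data: FourCC, length, then three 2-byte fields.
    public static void Parse(byte[] data, out int type, out int trackCount, out int timeBase)
    {
        if (data.Length < 14)
            throw new FormatException("Too short to hold an MThd chunk.");
        if (Encoding.ASCII.GetString(data, 0, 4) != "MThd")
            throw new FormatException("Missing MThd FourCC.");
        if (ReadUInt32BE(data, 4) != 6)
            throw new FormatException("MThd length must be 6.");
        type = ReadUInt16BE(data, 8);        // 0, 1 or 2
        trackCount = ReadUInt16BE(data, 10); // number of MTrk chunks to expect
        timeBase = ReadUInt16BE(data, 12);   // ticks per quarter note (assumed form)
    }
}
```

For the bytes 4D 54 68 64 00 00 00 06 00 01 00 02 01 E0, this reports a type 1 file with 2 tracks and a timebase of 480.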

The following chunks are "MTrk" chunks or proprietary chunks. We skip proprietary chunks, and read each "MTrk" chunk we find. An "MTrk" chunk represents a single MIDI file track (explained below) - which is essentially just MIDI messages with timestamps attached to them. A MIDI message with a timestamp on it is known as a MIDI "event." Timestamps are specified in deltas, with each timestamp being the number of ticks since the last timestamp. These are encoded in a funny way in the file. It's a byproduct of the 1980s and the limited disk space and memory at the time, especially on hardware sequencers - every byte saved was important. The deltas are encoded using a "variable length quantity".
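The relationship between delta and absolute timestamps is just a running sum. Here's a small sketch in plain C# that converts between the two; TickMath and its methods are hypothetical helpers written for this article, not library members.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class TickMath
{
    // Converts delta ticks (ticks since the previous event) to absolute ticks.
    public static int[] ToAbsolute(IList<int> deltas)
    {
        var result = new int[deltas.Count];
        int position = 0;
        for (int i = 0; i < deltas.Count; ++i)
        {
            position += deltas[i];
            result[i] = position;
        }
        return result;
    }

    // Converts absolute ticks (in ascending order) back to delta ticks.
    public static int[] ToDeltas(IList<int> absolute)
    {
        var result = new int[absolute.Count];
        int last = 0;
        for (int i = 0; i < absolute.Count; ++i)
        {
            if (absolute[i] < last)
                throw new ArgumentException("Positions must be in ascending order.", nameof(absolute));
            result[i] = absolute[i] - last;
            last = absolute[i];
        }
        return result;
    }
}
```

For example, the deltas 0, 240, 240, 480 correspond to the absolute positions 0, 240, 480, 960.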

Variable length quantities are encoded as follows: they carry 7 bits per byte, most significant bits first (big-endian order, like the rest of the format). Each byte has its high bit set (it is greater than 0x7F) except the last one, which must be less than 0x80. A value between 0 and 127 is represented by a single byte, while larger values take more. Variable length quantities can in theory be any size, but in practice they must be no greater than 0x0FFFFFFF, which is 28 bits of payload encoded in at most four bytes. You can hold them in an int, but reading and writing them can be annoying.
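Since reading and writing these is the annoying part, here's a minimal sketch of both directions in plain C#. The Vlq class is a hypothetical helper for this article and is not the library's implementation.

```csharp
using System;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class Vlq
{
    // Encodes a value (0 to 0x0FFFFFFF) as a variable length quantity.
    public static byte[] Encode(int value)
    {
        if (value < 0 || value > 0x0FFFFFFF)
            throw new ArgumentOutOfRangeException(nameof(value));
        var buf = new byte[4];
        int pos = 4;
        // fill from the end: the last byte has its high bit clear
        buf[--pos] = (byte)(value & 0x7F);
        // every earlier byte has its high bit set
        while ((value >>= 7) > 0)
            buf[--pos] = (byte)((value & 0x7F) | 0x80);
        var result = new byte[4 - pos];
        Array.Copy(buf, pos, result, 0, result.Length);
        return result;
    }

    // Decodes a variable length quantity starting at index,
    // advancing index past the bytes that were consumed.
    public static int Decode(byte[] data, ref int index)
    {
        int value = 0;
        for (int n = 0; n < 4; ++n)
        {
            if (index >= data.Length)
                throw new FormatException("Truncated variable length quantity.");
            byte b = data[index++];
            value = (value << 7) | (b & 0x7F);
            if ((b & 0x80) == 0)
                return value; // high bit clear marks the final byte
        }
        throw new FormatException("Variable length quantity is longer than four bytes.");
    }
}
```

A delta of 127 encodes as the single byte 7F, 128 becomes 81 00, and a quarter note at a 480 timebase becomes 83 60.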

What follows a variable length quantity delta is a MIDI message, which is at least one byte, but it will be different lengths depending on the type of message it is and some message types (meta messages and sysex messages) are variable length. It may be written without the status byte in which case the previous status byte is used. You can tell if a byte in the stream is a status byte because it will be greater than 0x7F (127) while all of the message payload will be bytes less than 0x80 (128). It's not as hard to read as it sounds. Basically for each message, you check if the byte you're on is high (> 0x7F/127) and if it is, that's your new running status byte, and the status byte for the message. If it's low, you simply consult the current status byte instead of setting it.

MIDI File Tracks

A MIDI type 1 file will usually contain multiple "tracks" (briefly mentioned above). A track usually represents a single score and multiple tracks together make up the entire performance. While this is usually laid out this way, it's actually channels, not tracks that indicate what score a particular device is to play. That is, all notes for channel 0 will be treated as part of the same score even if they are scattered throughout different tracks. Tracks are just a helpful way to organize. They don't really change the behavior of the MIDI at all. In a MIDI type 1 file - the most common type - track 0 is "special". It doesn't generally contain performance messages (channel messages). Instead, it typically contains meta information like the tempo and lyrics, while the rest of your tracks contain performance information. Laying your files out this way ensures maximum compatibility with MIDI devices out there.

Very important: A track must always end with the MIDI End of Track meta message.

Despite tracks being conceptually separate, the separation of scores is actually by channel under the covers, not by track, meaning you can have multiple tracks which when combined, represent the score for a device at a particular channel (or more than one channel). You can combine channels and tracks however you wish, just remember that all the channel messages for the same channel represent an actual score for a single device, while the tracks themselves are basically virtual/abstracted convenience items.

See this page for more information on the MIDI wire-protocol and the MIDI file format.

Now that we've covered the MIDI essentials and even some of the grotty details, let's move on to using the API.

Coding this Mess

The included Midi assembly and project exposes a generous API for working with MIDI files and individual tracks, and messages.

There are really 3 major classes and one minor one to work with, with the rest being sort of auxiliary and support.

MidiFile represents MIDI data in the MIDI file format. It is not necessarily backed by a real file. Often, these are created and discarded wholly in memory without ever writing to disk. The only reason it's suffixed with "File" is because it represents data in the MIDI file format. It includes Tracks, which gets you to the individual tracks, as well as many convenience members that access the all important "special" track 0 information, like Tempo, or in some cases apply an operation to all tracks in a file, like GetRange(). The class also exposes the static ReadFrom() methods, which read a MIDI file from a stream or a file path, and WriteTo(), which writes the MIDI file to the specified stream or file. You can also create a new instance with the specified timebase, but note that you cannot change the timebase once it's created. You can, however, call Resample(), which takes a new timebase and creates a new MidiFile instance with that timebase for you.

MidiSequence is the workhorse of the library. It handles all of the core operations. It can normalize or scale velocities using NormalizeVelocities() and ScaleVelocities(), retrieve a subset range using GetRange(), stretch or compress the timing of the sequence using Stretch(), and even Merge() and Concat() sequences. You can play the sequence on the calling thread using Preview(), which is useful for hearing the generated MIDI before you save it, or for simply playing it for any reason. A MidiSequence, like a track, is simply a collection of MIDI events. In fact, each MIDI track in MidiFile's "file" is represented by a MidiSequence instance. Note that most of the methods above are available on MidiFile itself as well, where they work on all the tracks in the file at once. You can access the events of the sequence through the Events and AbsoluteEvents properties, depending on whether you want the events returned in deltas or absolute ticks. Events itself is a modifiable list, while AbsoluteEvents is a lazily computed enumeration that cannot be modified. The bottom line is that you must specify ticks in deltas when you add or insert events.

MidiEvent is a minor class that simply contains a Position as well as a Message. The position can be indicated as a delta position in ticks, or an absolute position in ticks depending on whether it was retrieved through MidiSequence's Events or AbsoluteEvents property, respectively (see above).

MidiMessage and its many derived classes such as MidiMessageNoteOn and MidiMessageNoteOff represent the individual MIDI messages supported by the wire-protocol and file format both. There are many. It should be noted that they typically derive from classes like MidiMessageByte (a message with a single byte parameter) or MidiMessageWord (a message with two byte parameters such as a note on, or a message with a 2 byte parameter such as the pitch wheel change message). You can use those low level classes directly if you like, but they're not as friendly as the higher level ones, especially when it comes to quirky or complicated messages like time signature and tempo change meta messages.

The support classes include MidiTimeSignature which represents a time signature, MidiKeySignature which represents a key signature, MidiNote, which we'll cover below, and MidiUtility which you shouldn't need that much.

Using MIDI note on/note off messages is perfect for real time performance but leaves something to be desired when it comes to higher level analysis of sequences and scores. It's often better to understand a note as something with an absolute position, a velocity and a length. MidiSequence provides the ToNoteMap() method which retrieves a list of MidiNote instances representing the notes in a sequence, complete with lengths, rather than the note on/note off paradigm. It also provides the static FromNoteMap() method which gets a sequence from a note list of MidiNotes. This can make it easier to both create and analyze scores.
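To show the idea behind a note map, here's a conceptual sketch of pairing note ons with note offs to produce notes with lengths. It is not the library's ToNoteMap() implementation: NoteEvent, Note, and NotePairing are stand-in types made up for this example, and treating a note on with zero velocity as a note off is an assumption of the sketch.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins for illustration only; these are not the library's types.
public sealed class NoteEvent
{
    public int Position;   // absolute position in ticks
    public bool IsNoteOn;  // true for note on, false for note off
    public byte Channel, NoteId, Velocity;
}

public sealed class Note
{
    public int Position, Length; // both in ticks
    public byte Channel, NoteId, Velocity;
}

public static class NotePairing
{
    // Pairs note ons with note offs. Events must be ordered by position.
    public static List<Note> ToNotes(IEnumerable<NoteEvent> events)
    {
        // notes that are sounding, keyed by channel and note id
        var open = new Dictionary<int, List<Note>>();
        var result = new List<Note>();
        foreach (var ev in events)
        {
            int key = ev.Channel * 128 + ev.NoteId;
            List<Note> pending;
            if (ev.IsNoteOn && ev.Velocity > 0)
            {
                if (!open.TryGetValue(key, out pending))
                    open[key] = pending = new List<Note>();
                var started = new Note
                {
                    Position = ev.Position,
                    Channel = ev.Channel,
                    NoteId = ev.NoteId,
                    Velocity = ev.Velocity
                };
                pending.Add(started);
                result.Add(started);
            }
            else if (open.TryGetValue(key, out pending))
            {
                // a note off releases every sounding note with this id on this channel
                foreach (var sounding in pending)
                    sounding.Length = ev.Position - sounding.Position;
                pending.Clear();
            }
        }
        return result; // notes that were never released keep a length of 0
    }
}
```

The result is a list in which every note carries its own start position and length, which is much easier to analyze than a stream of separate on and off messages.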

Techniques With the API

Terminating Sequences/Tracks

Important: We'll start here, since this is critical. The API will usually terminate sequences for you with the end of track marker when you use operations like Merge(), Concat() or GetRange(), but if you build a sequence from scratch, you will need to insert it at the end manually. While this API will basically work without it, many, if not most, MIDI applications will not, so writing a file without it is essentially akin to writing a corrupt file:

track.Events.Add(new MidiEvent(0,new MidiMessageMetaEndOfTrack()));

You should rarely have to do this, but again, you'll need to if you construct your sequences manually from scratch. Also, the 0 will need to be adjusted to your own delta time to get the length of the track right.

Executing Sequence and File Transformations in Series

This is simple. Every time we do a transformation it yields a new object so we replace the variable each time with the new result:

// assume file (variable) is our MidiFile

// modify track #2 (index 1)

var track = file.Tracks[1];

track = track.NormalizeVelocities();

track = track.ScaleVelocities(.5);

track = track.Stretch(.5);

// reassign our track

file.Tracks[1]=track;

The same basic idea works with MidiFile instances, too.

Searching or Analyzing Multiple Tracks Together

Sometimes you might need to search multiple tracks at once. While MidiFile provides ways to do this for common searches across all tracks in a file, you might need to operate over a list of sequences or some other source. The solution is simple: temporarily merge your target tracks into a new track and then operate on that. For example, say you want to find the first downbeat wherever it occurs in any of the target tracks:

// assume IList<MidiSequence> trks is declared

// and contains the list of tracks to work on

var result = MidiSequence.Merge(trks).FirstDownBeat;

You can do manual searches by looping through events in the merged tracks too. This technique works for pretty much any situation. Merge() is a versatile method and it is your friend.

Inserting Absolutely Timed Events

It's often a heck of a lot easier to specify events in absolute time. The API doesn't give you a way to do this directly, but there's a way to do it indirectly:

// myTrack represents the already existing sequence

// we want to insert an absolutely timed event into

// while absoluteTicks specifies the position at

// which to insert the message, and msg contains

// the MidiMessage to insert

// create a new MidiSequence and add our absolutely

// timed event as the single event in this sequence

var newTrack = new MidiSequence();

newTrack.Events.Add(new MidiEvent(absoluteTicks, msg));

// now reassign myTrack with the result of merging

// it with newTrack:

myTrack = MidiSequence.Merge(myTrack,newTrack);

First, we create a new sequence and add our absolutely timed message to it. Since it's the only message, the delta is the number of ticks from zero, which is the same as an absolute position. Finally, we take our current sequence and reassign it with the result of merging our current sequence with the sequence we just created. All operations return new instances. We don't modify existing instances, so we often find we are reassigning variables like this.
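If you're curious why merging gets the timing right, here's a conceptual sketch of merging two delta-timed event lists in plain C#. It is not the library's Merge() implementation, just one straightforward way such a merge can work; DeltaMerge is a hypothetical class, and events are modeled as simple pairs of delta ticks and a label.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper for illustration only; not part of the Midi library.
public static class DeltaMerge
{
    // Each event is a pair of (delta ticks, label). Returns one merged list
    // with the deltas recomputed relative to the new neighbors.
    public static List<KeyValuePair<int, string>> Merge(
        IList<KeyValuePair<int, string>> a,
        IList<KeyValuePair<int, string>> b)
    {
        // step 1: convert both lists to absolute positions
        var all = new List<KeyValuePair<int, string>>();
        foreach (var seq in new[] { a, b })
        {
            int pos = 0;
            foreach (var ev in seq)
            {
                pos += ev.Key;
                all.Add(new KeyValuePair<int, string>(pos, ev.Value));
            }
        }
        // step 2: order by absolute position (OrderBy is a stable sort,
        // so events at the same tick keep their relative order)
        var sorted = all.OrderBy(e => e.Key).ToList();
        // step 3: convert back to deltas
        int last = 0;
        for (int i = 0; i < sorted.Count; ++i)
        {
            int abs = sorted[i].Key;
            sorted[i] = new KeyValuePair<int, string>(abs - last, sorted[i].Value);
            last = abs;
        }
        return sorted;
    }
}
```

Merging a list holding A at delta 0 and B at delta 480 with a single event X at delta 240 gives A at 0, X at 240, and B at 240, so B still lands on absolute tick 480.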

Creating a Note Map

An easier way to do the above, at least when dealing with notes, is to use FromNoteMap(). Basically, you just queue up a list of absolutely positioned notes and then call FromNoteMap() to get a sequence from it.

var noteMap = new List<MidiNote>();

// add a C#5 note at position zero, channel 0,

// velocity 127, length 1/8 note @ 480 timebase

noteMap.Add(new MidiNote(0,0,"C#5",127,240));

// add a D#5 note at position 960 (1/2 note in), channel 0,

// velocity 127, length 1/8 note @ 480 timebase

noteMap.Add(new MidiNote(960,0,"D#5",127,240));

// now get a MidiSequence

var seq = MidiSequence.FromNoteMap(noteMap);

You can also get a note map from any sequence by calling ToNoteMap().

Looping

It can be much easier to specify our loops in beats (1/4 notes at 4/4 time), so we can multiply the number of beats we need by the MidiFile's TimeBase to get the equivalent number of ticks, at least for 4/4. I won't cover other time signatures here as that's music theory, and beyond the scope of this article. You'll have to deal with time signatures if you want this technique to be accurate. Anyway, it's also helpful to start looping at the FirstDownBeat or the FirstNoteOn, or at least at an offset of beats from one of those locations. The difference between them is that FirstDownBeat hunts for a bass/kick drum while FirstNoteOn hunts for any note. Once we compute our offset and length, we can pass them to GetRange() in order to get a MidiSequence or MidiFile with only the specified range, optionally copying the tempo, time signature, and patches from the beginning of the sequence.

// assume file holds a MidiFile we're working with

var start = file.FirstDownBeat;

var offset = 16; // 16 beats from start @ 4/4

var length = 8; // copy 8 beats from start

// convert beats to ticks

offset *= file.TimeBase;

length *= file.TimeBase;

// get the range from the file, copying timing

// and patch info from the start of each track

file = file.GetRange(start+offset,length,true);

// file now contains an 8 beat loop

Previewing/Playing

You can play any MidiSequence or MidiFile using Preview(), but using it from the main application thread is almost never what you want, since it blocks. This is especially true when specifying the loop argument because it will hang the calling thread indefinitely while it plays forever. What you actually want to do is spawn a thread and play it on the thread. Here's a simple technique to do just that by toggling whether it's playing or not any time this code runs:

// assume a member field is declared:

// Thread _previewThread and file

// contains a MidiFile instance

// to play.

if(null==_previewThread)

{

// create a clone of file for

// thread safety. not necessary

// if "file" is never touched

// again

var f = file.Clone();

_previewThread = new Thread(() => f.Preview(0, true));

_previewThread.Start();

} else {

// kill the thread

_previewThread.Abort();

// wait for it to exit

_previewThread.Join();

// update our _previewThread

_previewThread = null;

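}

You can then call this code from the main thread to either start or stop playback of "file". Be aware that Thread.Abort() is only supported on .NET Framework; on .NET Core and .NET 5 or later it throws PlatformNotSupportedException, so this approach needs a different way of stopping playback there.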

The Demo Projects

Check out MidiSlicer, the slicer app, and FourByFour, a simple 4/4 drum step sequencer. We'll start with the drum sequencer since it's simpler:

MidiFile _CreateMidiFile()

{

var file = new MidiFile();

// we'll need a track 0 for our tempo map

var track0 = new MidiSequence();

// set the tempo at the first position

track0.Events.Add(new MidiEvent(0, new MidiMessageMetaTempo((double)TempoUpDown.Value)));

// compute the length of our loop

var len = ((int)BarsUpDown.Value) * 4 * file.TimeBase;

// add an end of track marker just so all

// of our tracks will be the loop length

track0.Events.Add(new MidiEvent(len, new MidiMessageMetaEndOfTrack()));

// here we need a track end with an

// absolute position for the MIDI end

// of track meta message. We'll use this

// later to set the length of the track

var trackEnd = new MidiSequence();

trackEnd.Events.Add(new MidiEvent(len, new MidiMessageMetaEndOfTrack()));

// add track 0 (our tempo map)

file.Tracks.Add(track0);

// create track 1 (our drum track)

var track1 = new MidiSequence();

// we're going to create a new sequence for

// each one of the drum sequencer tracks in

// the UI

var trks = new List<MidiSequence>(BeatsPanel.Controls.Count);

foreach (var ctl in BeatsPanel.Controls)

{

var beat = ctl as BeatControl;

// get the note for the drum

var note = beat.Note;

// it's easier to use a note map

// to build the drum sequence

var noteMap = new List<MidiNote>();

for (int ic = beat.Steps.Count, i = 0; i < ic; ++i)

{

// if the step is pressed create

// a note for it

if (beat.Steps[i])

noteMap.Add(new MidiNote(i * (file.TimeBase / 4),

9, note, 127, file.TimeBase / 4-1));

}

// convert the note map to a sequence

// and add it to our working tracks

trks.Add(MidiSequence.FromNoteMap(noteMap));

}

// now we merge the sequences into one

var t = MidiSequence.Merge(trks);

// we merge everything down to track 1

track1 = MidiSequence.Merge(track1, t, trackEnd);

// .. and add it to the file

file.Tracks.Add(track1);

return file;

}

This is the heart of the drum sequencer, which creates a MidiFile out of the UI information. The weird part might be trackEnd. trackEnd is a track with a single end of track MIDI meta message. This is so we could set an absolute time for the event in ticks, as shown earlier. We merge this down into the drum track just so we can ensure that the track ends at the right time. Otherwise, a track might end early, which isn't a killer in this case since track 0's length is already set, but we want our file to be nice and neat in case a sequencer that loads the saved file is overly sensitive and cantankerous. The rest should be self-explanatory from the comments above, hopefully.

Next, we have MidiSlicer which is a bit more complex just due to the number of features.

MidiFile _ProcessFile()

{

// first we clone the file to be safe

// that way in case there's no modifications

// specified in the UI we'll still return

// a copy.

var result = _file.Clone();

// transpose it if specified

if(0!=TransposeUpDown.Value)

result = result.Transpose((sbyte)TransposeUpDown.Value,

WrapCheckBox.Checked,!DrumsCheckBox.Checked);

// resample if specified

if (ResampleUpDown.Value != _file.TimeBase)

result = result.Resample(unchecked((short)ResampleUpDown.Value));

// compute our offset and length in ticks or beats/quarter-notes

var ofs = OffsetUpDown.Value;

var len = LengthUpDown.Value;

if (0 == UnitsCombo.SelectedIndex) // beats

{

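// use result rather than _file here, since result

// may have been resampled to a new timebase above

len = Math.Min(len * result.TimeBase, result.Length);

ofs = Math.Min(ofs * result.TimeBase, result.Length);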

}

switch (StartCombo.SelectedIndex)

{

case 1:

ofs += result.FirstDownBeat;

break;

case 2:

ofs += result.FirstNoteOn;

break;

}

// nseq holds our patch and timing info

var nseq = new MidiSequence();

if(0!=ofs && CopyTimingPatchCheckBox.Checked)

{

// we only want to scan until the

// first note on

// we need to check all tracks so

// we merge them into mtrk and scan

// that

var mtrk = MidiSequence.Merge(result.Tracks);

var end = mtrk.FirstNoteOn;

if (0 == end) // break later:

end = mtrk.Length;

var ins = 0;

for (int ic = mtrk.Events.Count, i = 0; i < ic; ++i)

{

var ev = mtrk.Events[i];

if (ev.Position >= end)

break;

var m = ev.Message;

switch (m.Status)

{

// the reason we don't check for MidiMessageMetaTempo

// is a user might have specified MidiMessageMeta for

// it instead. we want to handle both

case 0xFF:

var mm = m as MidiMessageMeta;

switch (mm.Data1)

{

case 0x51: // tempo

case 0x54: // smpte

if (0 == nseq.Events.Count)

nseq.Events.Add(new MidiEvent(0,ev.Message.Clone()));

else

nseq.Events.Insert(ins, new MidiEvent(0,ev.Message.Clone()));

++ins;

break;

}

break;

default:

// check if it's a patch change

if (0xC0 == (ev.Message.Status & 0xF0))

{

if (0 == nseq.Events.Count)

nseq.Events.Add(new MidiEvent(0, ev.Message.Clone()));

else

nseq.Events.Insert(ins, new MidiEvent(0, ev.Message.Clone()));

// increment the instrument count

++ins;

}

break;

}

}

// set the track to the loop length

nseq.Events.Add(new MidiEvent((int)len, new MidiMessageMetaEndOfTrack()));

}

// see if track 0 is checked

var hasTrack0 = TrackList.GetItemChecked(0);

// slice our loop out of it

if (0!=ofs || result.Length!=len)

result = result.GetRange((int)ofs, (int)len,CopyTimingPatchCheckBox.Checked,false);

// normalize it!

if (NormalizeCheckBox.Checked)

result = result.NormalizeVelocities();

// scale levels

if (1m != LevelsUpDown.Value)

result = result.ScaleVelocities((double)LevelsUpDown.Value);

// create a temporary copy of our

// track list

var l = new List<MidiSequence>(result.Tracks);

// now clear the result

result.Tracks.Clear();

for(int ic=l.Count,i=0;i<ic;++i)

{

// if the track is checked in the list

// add it back to result

if(TrackList.GetItemChecked(i))

{

result.Tracks.Add(l[i]);

}

}

if (0 < nseq.Events.Count)

{

// if we don't have track zero we insert

// one.

if(!hasTrack0)

result.Tracks.Insert(0,nseq);

else

{

// otherwise we merge with track 0

result.Tracks[0] = MidiSequence.Merge(nseq, result.Tracks[0]);

}

}

// stretch the result. we do this

// here so the track lengths are

// correct and we don't need ofs

// or len anymore

if (1m != StretchUpDown.Value)

result = result.Stretch((double)StretchUpDown.Value, AdjustTempoCheckBox.Checked);

// if merge is checked merge the

// tracks

if (MergeTracksCheckBox.Checked)

{

var trk = MidiSequence.Merge(result.Tracks);

result.Tracks.Clear();

result.Tracks.Add(trk);

}

return result;

}

As you can see, MidiSlicer's file processing routine works with an existing file, represented by the member _file, and is quite a bit more complicated than the drum sequencer due to the number of UI options. Note how we've manually scanned for tempo, SMPTE, and patch change messages at the beginning of the track(s). This is so we can copy them to the new file we're creating, even if the new file is at an offset and/or start position other than the beginning. We didn't have to do it this way. GetRange() can do it for us. The truth is I added that feature after I wrote this code, but I decided to leave it so you could see how to scan events for certain messages in a robust manner.

History

- 25th March, 2020 - Initial submission

- 26th March, 2020 - Doubled length of sequencer. Minor improvements

- 26th March, 2020 - Bugfix in FourByFour

- 26th March, 2020 - Added Bars option to FourByFour

- 26th March, 2020 - Slight API improvements