JSON on Fire: JSON (C++) is a Blazing JSON Library that can Run on Low Memory Devices

Updated on 2020-12-22

A JSON pull parser and a pooling in-memory tree library for modern IoT or even your PC

Introduction

Update: The Githib repository has changed to a rewritten codebase and a new API. There's a corresponding article for the new, vastly improved code here.

C++ is wonderful in that it really is code once, run anywhere - even places where .NET and Java can't go. With it, you can target many of the tiny 32-bit IoT systems out there today.

One of the things these systems don't have is a lot of RAM, so care must be taken when designing functionality that will run in these environments.

JSON is ubiquitous and connected devices often need to speak it, but a lot of libraries for it basically require you to work with in-memory JSON documents which can take quite a bit of RAM to use.

To that end, I've written a library that tries to give you a way to avoid loading entire documents, and then if you must load fragments into RAM, it can pool strings, and filter for desired fields to keep the size down.

Furthermore, it exposes all of its memory allocation to you, so you can control exactly where your JSON gets loaded and for how long. Allocation is very inexpensive, and so is freeing, so basically you allocate/query/discard and then repeat.

There is the great ArduinoJson library for Arduino devices, but it's primarily in-memory only and I think? it's tied to the Arduino SDK. It will however, compile and run on the raw Arduino SDK without anything like the ESP-IDF unlike this library. Use it if you're coding for an ATmega2560. Consider using this one if you're coding for something like a Raspberry Pi or an ESP32, or if you just need a fast lil JSON query machine in your C++ app.

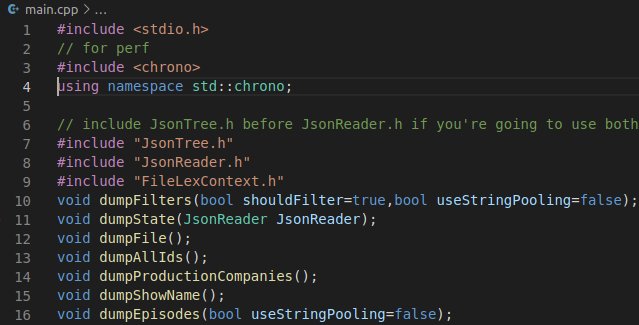

Building this Mess

Just use your favorite compiler and compile main.cpp. That's the demo file. data.json is the test data file. The rest is the library files. It's quick and dirty, but I'm working on it. I built it with GCC using VS Code as an editor. I've also built a variant of main.cpp as an ino file using the Arduino IDE and ESP32 board manager.

Disclaimers

This code does not support Unicode even though the spec calls for it. In constrained memory environments, Unicode is kind of evil and anyway lots of platforms don't fully support it in their libraries if at all so any Unicode string character outside 0-255 will be converted to ?. It was a compromise I thought deeply about, and as much as I don't like it, it's liveable. I may add Unicode compilation options in the future, but that would be a pretty substantial code update.

This code is still somewhat experimental. I haven't tested it nearly as much as I'd like, but I'm hoping to get it out there for people to bang on even as I'm still working on it, because feedback is good. I've tried to clear out segfaults and such but especially if you're dealing with non-well formed data I haven't tested those scenarios enough yet.

Conceptualizing this Mess

The Pull Parser: JsonReader.h

At the heart of this library is JsonReader, a pull parser for reading documents of virtually any size by examining small windows of them at a time. Pull parsers are extremely efficient in terms of both memory and speed, but using them can be difficult sometimes. I've added some facilities to make that easier.

Lexing

You instantiate a JsonReader over a LexContext, which is a lightweight, forward only cursor over some kind of input and an included capture buffer. JsonReader uses the LexContext both to read input from the underlying source, and to capture relevant input into a buffer for later examination. You declare a LexContext to use and give it a capacity of a capture buffer. This must be large enough to hold any single field or value you wish to examine, including escapes and quotes. 1kB might be excessive depending on what you're doing, or it might not be quite long enough for those long overview and description fields in your document. It all depends. You know the JSON source and queries you are using better than anyone, so code accordingly. I usually like to profile on a desktop with gobs of RAM to figure out what kind of space I actually need and then set my capacities accordingly. Note that this does not need to hold the longest value in the document - just the longest value that you'll actually need to examine. If you get JSON_ERROR_OUT_OF_MEMORY errors, you might need to increase it (though there are other things that can cause this, which we'll explore later.)

It should be noted that while I've provided a FileLexContext/StaticFileLexContext for reading from files, and ArduinoLexContext (where applicable) for reading from Arduino Stream interfaces, you can easily implement your own for other sources, such as a network socket. All you have to do is provide derive from LexContext, provide an initialization method of your choice such as open(), or attach() and implement int16_t read() which advances the cursor by one and returns the next character, or LexContext::EndOfInput if the source is past the end, or LexContext::Closed if closed or I/O error. Once you've done that, you can create a template class that derives from that class and StaticLexContext in order to complete your definition. See FileLexContext.hpp for a full example. It's actually very easy to do.

Reading

The main parsing method is read() which reads a single step of the parse. You can call this in a while loop in order to retrieve all the data, one element at a time. At each point, you can query the parser for its information

Querying the Parser

On any node, you call various getter methods to get information about the parsing state, like what type of node the document is on, or what the current value under the logical document cursor is:

nodeType(): Gets the type of node the parser is examining. This can beJsonReader::Initial,JsonReader::Value,JsonReader::Field,JsonReader::Array,JsonReader::EndArray,JsonReader::Object,JsonReader::EndObject,JsonReader::EndDocument, orJsonReader::Errorvalue(): Gets the node's value as a null terminated string. This is only valid for node typesJsonReader::Valuewhere it indicates the string value of the scalar field or array element,JsonReader::Fieldwhere it indicates the field name, andJsonReader::Errorwhere it indicates the error message.valueType(): Gets the intrinsic type of the value under the cursor. This is only valid for nodes which have avalue(). It will beJsonReader::Unknownfor anything else. Valid types areJsonReader::Nullfornull,JsonReader::Booleanfortrueorfalse,JsonReader::Stringfor a string, orJsonReader::Numberfor a numeric value.isIntegerValue()indicates whether or not the value under the cursor (if there is one) can be interpreted as an integer. Integers are not part of the JSON specification, however if we're forced to use floating point for everything we can lose fidelity, which is detrimental for values likeids. This method will scan through a number to see if it's of the pattern\-?[0-9]+that is to say, if it is just an optional hyphen followed by one or more digits. If this returns true, you might be better off treating this value as an integer rather than a floating point.booleanValue(),value(),numericValue()and the (spec extended)integerValue()can be used to retrieve values for booleans, strings, reals and integers respectively.undecorate()is an important method forJsonReader::Stringvalues because it does in-place removal of quotes and translation of escapes to their actual values. Since it is in place, it has side effects. You should not callvalueType(),isIntegerValue()or other value accessing methods other thanvalue()once this value is called as while they won't crash, they also won't be reliable.context()returns the attachedLexContextso you can do things like get the physical cursor position, which is always slightly ahead of whatread()is reporting. You usually won't need it but it can help when debugging queries.

Navigation

You usually won't be using read() except as a secondary facility to advance the cursor or read a single value. There are methods for actual navigation which can make your life much easier:

skipToField(): This finds the next field in the document with the given name along the given axis. It will not examine the current field - only successive fields. That way you can call it in a loop. It supports the axisJsonReader::Siblingswhich finds sibling fields from the current location,JsonReader::Descendants, which finds descendants from the current location, andJsonReader::Allwhich is a fast, forward searching axis that doesn't care about hierarchy. It's a great way to brute force find all fields with a particular name in the document, and quickly.skipToIndex(): Skips to the given index in the arrayskipSubtree(): Skips the current subtree under the cursor. This is useful to be able to examine the next element in the document that is on the same level of the hierarchy (a sibling)skipToEndObject(): Skips to the end of the current objectskipToEndArray(): Skips to the end of the current array

Besides ease of use, another advantage to using these methods is that they are faster than the equivalent read() calls, and use less RAM. I took great pains to optimize these calls so that they do minimal normalization and no allocation during the scan. They also don't necessarily always do well-formedness checking. I decided the trade off was worth it especially since JSON documents are typically machine generated. The downside is if a document is not well formed, it may either not detect it if you're using skip methods, or it will error later in the scan. Again, I thought this was worth the tradeoff in terms of performance.

The reason I did this, is this is the first phase of the query process - navigating to the general section(s) of the document you want to examine. Get familiar with these methods, particularly skipToField() since this is the preferred method of advancing through a document. We'll be exploring examples later.

Error Handling

Most of the methods return a bool indicating success, but failure doesn't always mean there was an error. For example, if you call skipToField("foo",JsonReader::Siblings) and "foo" is not present, the method will return false, but this is not an error - just a result. Errors are indicated by the parser's nodeState() being JsonReader::Error. lastError() can then be called to get the error code - one of the JSON_ERROR_XXXX constants, or JSON_ERROR_NO_ERROR which indicates no error. An error message can usually be retrieved by getting the error node's value() but the error reporting is complicated and it's not always reliable yet.

In-Memory JSON Trees: JsonTree.h

This is an optional portion of the library. You might never need it, but for more complicated queries, the pull parser can become really difficult and you might one day need the help that a modest in memory tree can bring you in order to pull one off.

Our in memory trees are very simple. There is no hashing so all lookups are linear. The justification is that if you're loading enough JSON into memory where hashing gets you much if any performance gain, you probably are using the library wrong. Don't load objects with 12 fields into memory just so you can get to two of them! We'll get to a better way to handle that.

JSON Elements

An element is a composable unit of a JSON document. It can represent an object, an array, or a scalar value. We can build documents out of these. In the code, all elements, regardless of what they represent are implemented by JsonElement which is a sort of variant that can be any type of element. It should be noted that due to the memory scheme used, JsonElement objects are not efficient to edit. You should not try to use them as some sort of DOM. Load the data you need into them, use them, and then throw them away. Do not load them, and then repeatedly edit them.

An element has a type() and a value which isUndefined(), or a null(), a boolean(), a real(), an integer(), a string(), a pobject(), or a parray().

The type() indicates JsonElement::Undefined, JsonElement::Null, JsonElement::Boolean, JsonElement::Real, JsonElement::Integer, JsonElement::String, JsonElement::Object, or JsonElement::Array.

You've seen the get accessor methods above for getting a typed value from the element. Each of the set accessors is a method of the same name taking a single argument, which is almost always a value of the desired type. For example, myElement.boolean(true) would set the element myElement to a type of JsonElement::Boolean and give it a value of true. The exceptions are pobject(dummy) and parray(dummy) which take a dummy null and just signal to set the appropriate type().

Building objects and arrays is a little more involved. You have to call myElement.pobject(nullptr) and then repeatedly call myElement.addField(...) to add fields to it. You do the same with arrays, except it's parray(nullptr) and addItem(...). Fields and array items are internally stored as linked lists using JsonFieldEntry and JsonArrayEntry structures. You get to the head of the lists through the pobject() and parray() get accessor methods.

Once you have an object, operator[] getter overloads work for JsonElement::Array and JsonElement::Object types, or you can call toString() which allocates a string representation of the (minified) JSON and returns you an char* sz pointer to it.

Managing Memory

Certain JsonElement related methods take a MemoryPool& pool parameter. These methods require memory allocation. The pool is what they will allocate from. We use these MemoryPool objects to directly control where and how our JSON gets allocated, and when it gets freed. This amount of control is a double edged sword, but given this primarily targets low memory environments, it's worth the slight complication. The complication also pays for itself due to extremely high performance memory allocation from those pools.

When we need to do an allocation, we allocate from these pools instead of a raw heap as I alluded to above. Nothing ever gets individually deleted from these pools, but the pools can be recycled in their entirety, invalidating all data in the pool at once. These means that if you use JsonElement's setString() method, a new string will be allocated each time, and the old one will not be deleted. This is why I said editing is inefficient.

We create our pools, usually on the heap, but you can make them on the stack as well. JsonElements can refer to JsonElements in other pools just fine, but it's usually not how you want to do it since you could potentially invalidate one pool with freeAll() and the other pool might still have objects referring to data therein. What we usually do is do a lot of rapid allocations and then free the entire pool shortly thereafter, just as soon as the operation we needed it for is complete. We can then recycle the pool for future operations.

Loading in Memory Documents

If you've included JsonTree.h above JsonReader.h, you will have the ability to parseSubtree()s out of the documents. You can call JsonReader's parseSubtree() method from most points in the document to get JsonElement objects out of them. This can be useful if you're doing complicated queries that involve multiple fields from an object or that must collate data from subobjects or siblings. It's just rough to try to do that with only a pull parser. Believe me, I've tried. That's why I wrote this part of the project. However, while you can simply open a JSON document, and parseSubtree() on the root, that will use more memory than you need to be using, and I didn't really design it for that. If I did for example, I would have hashed the object fields for faster lookup. Let's talk about a better way:

Loading in Memory Documents With Filters

You almost never need the whole document. What you need are certain fields from certain objects, right? Why load them all then? It wastes time, it wastes RAM, and it's not necessarily simpler to code.

parseSubtree() can take a JsonParseFilter structure which can be used to blacklist or whitelist fields. Whitelisting is particularly powerful and is probably the 80% case because it allows you to select known fields and exclude the rest. Blacklisting would mostly be useful if you want to list fields you don't know exist ahead of time, but want to exclude certain fields you know are going to be large, like say a description, or a base64 encoded blob.

You construct a JsonParseFilter with the fields you want to include or exclude, their count, and the type of filter.

A JsonParseFilter with a type of JsonParseFilter::WhiteList can also fill the pvalues member with an array the length of fieldCount to hold returned values for each of the found fields. What I mean is, if you fill this member with an array, an array will be populated with a JsonElement* object that represents the value of each matching field. This means in most cases, your filter can just get you the query results you're after, cutting out any extra steps. This is done with almost no additional performance overhead, so it's a great way to run a query.

A whitelist filter can also contain subfilters. Basically for each whitelisted field, you can apply an additional filter for that field, and so on. Blacklist filters can be children, but cannot use child filters themselves - their pfilters member is ignored. To use a child filter, you must populate the pfilters member with an array the same length as fields/fieldCount with pointers to additional JsonParseFilter structures, which can themselves have children. If one of the entries in your whitelist does not need a filter, that array element can be null. Arrays don't count as a level in the hierarchy so if your object has a field with an array of child objects, you can set the filter for that field and it will apply to the objects in that array.

Learn this. Use it. This is a big part of how you do complicated queries with this library. I was thinking of exposing JSONPath but it would just use more memory, and between this and skipToField() this handles so many use cases that it doesn't seem worth the additional resource requirements and effort.

Loading in Memory Documents With String Pooling

Frequently, JSON data can have a lot of repeating fields. While it's preferable to cut down the number of fields you load in the first place using filtering, sometimes you just need a lot of data. When fields with common names get repeated, it wastes RAM. To solve this, a second, dedicated MemoryPool can be used as a string pool, and passed to parseSubtree(). If one is passed, a remedial "almost" hash table is created in the pool and strings are allocated to that table. Duplicate strings will always return the same pointer. parseSubtree() will allocate field names to this pool if its passed in, and if poolValues is specified, then string values will be pooled as well, although this often doesn't help so it's off by default. Note that this second pool must never be used for anything other than string pooling, although you can reuse the same pool for multiple different documents if you want.

Putting it Together: Executing Complicated Queries Involving In-Memory Trees

Doing this involves several steps. First, you need to allocate the MemoryPool(s) and LexContext with effective capacities. I usually start with a larger value for the capacities and then decrease it after profiling.

Once you have those, prepare the LexContext with the appropriate initialization method, like open() on StaticFileLexContext

Now set up your filter. You're using a filter, right? You should almost always be using a (whitelist) filter. This makes it far easier (and more efficient!) to query the document anyway, by using the pvalues member as described before. Make it happen. Create a filter that will get you the desired fields from your object and subobjects. This filter will be relative to where we navigate in the next step.

Now, initialize a JsonReader with that LexContext and use the reader to navigate to an area of interest. You might (probably) need to set this up so it navigates to several of them in a loop. We assume you're looping here. You'll usually use while(skipToField()) for this, and then follow that with a read() to load the field's value.

Call freeAll() on your primary pool but not on your string pool. This will free and recycle any memory allocated in the pool, and we want to do this before each iteration. If we're using a string pool we keep the string pool between calls since ultimately it benefits us but usually string pooling isn't worth it when we're already filtering down to a few unique fields. You'll almost never actually use it if you're doing queries this way unless you need to load a lot of data during this step - in which case, maybe you should redesign your query?

Now call parseSubtree() and make sure the result is not a nullptr, which indicates failure.

Now, forget about the return value of parseSubtree() for a moment and examine pvalues of your filter structure instead. They should contain the values for the fields you queried for, assuming those fields were present. If they weren't, the value for that field will be a nullptr. If you're using nested whitelists, you can examine those nested filter structures here too. Note that this doesn't really work with arrays since there's nowhere to return multiple values in a pvalues element.

Aaaand Bob's your uncle. Now in that step you can take each of the JsonElement* values from pvalues and use them to your heart's content.

Let's walk through this with real code now.

Coding this Mess

We're going to be performing various queries over some JSON data returned from tmdb.com for an American television show called Burn Notice. The complete information for the 7 season 111 episode series in JSON form is in the neighborhood of 190kB+ of data, pretty printed, with lots of nested arrays, objects and fields, which makes it a pretty good test set for these purposes - you'll find it in data.json included with the project:

...

"homepage": "http://usanetwork.com/burnnotice",

"id": 2919,

"in_production": false,

"languages": [

"en"

],

"last_air_date": "2013-09-12",

"last_episode_to_air": {

"air_date": "2013-09-12",

"episode_number": 13,

"id": 224759,

"name": "Reckoning",

"overview": "Series Finale. Michael must regain the trust of those

closest to him in order to finish what he started.",

"production_code": null,

"season_number": 7,

"show_id": 2919,

"still_path": "/lGdhFXEi29e2HeXMzgA9bvvIIJU.jpg",

"vote_average": 0,

"vote_count": 0

},

"name": "Burn Notice",

"next_episode_to_air": null,

"networks": [

{

"name": "USA Network",

"id": 30,

"logo_path": "/g1e0H0Ka97IG5SyInMXdJkHGKiH.png",

"origin_country": "US"

}

],

"number_of_episodes": 111,

"number_of_seasons": 7,

...Using skipToField()

We'll start with using skipToField() since it's absolutely critical:

bool skipToField(const char* field, int8_t searchAxis,unsigned long int *pdepth=nullptr);fieldis the field name you want to skip to. Currently, there are no wildcard matches - you need to know what you're looking for.searchAxisindicates the direction/path of the search and can beJsonReader::Siblings,JsonReader::Descendants, orJsonReader::All. These are similar to the axis concept in XPath, and JSONPath but they work a little differently when it comes to the details.pdepthis required forsearchAxis=JsonReader::Descendantsand ignored for all other axes. It is a cookie of sorts used to track where you are in the hierarchy when the search starts. You declare anunsigned long int depth = 0;parameter and simply pass its address here for each successive related call toskipToField(). This is so it can track where you started. You don't have to do anything with the variable other than keep it in scope for the duration of the operation - your while loop. I could have made separate methods likeskipToFirstDescendantField(),skipToNextDescendantField()but this seemed a less heavy handed way of accomplishing the same thing.

It returns true if a matching field was found, or false if one was not found, even if it was not found because an error occurred. Due to this potential ambiguity, when you get false, you should check the nodeType() for JsonReader::Error to determine why it didn't succeed. If it's not an error, it means that it couldn't find a matching field. Note that this again, is a forward only parser. With axis JsonReader::All, if you don't find a field, you'll keep scanning until you reach the end, and then you're stuck there, so be careful with it.

Now let's look at some examples:

// we only even need 14 bytes for this!:

// "Burn Notice" plus the null terminator

// In the real world, give it some breathing room

// for Arduino this code is slightly different than

// this which uses standard stdio file op,

// unavailable in the base Arduino SDK

// you'd instead use ArduinoLexContext<14>

// (always static implied) and you'd use it below

// with a Stream derived class like File

StaticFileLexContext<14> lexContext;

...

// open the file

if (!lexContext.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

// create the reader

JsonReader jsonReader(lexContext);

// we start on nodeType() Initial, but that's sort of a pass through node. skipToField()

// simply reads through when it finds it, so our first level is actually the root object

// look for the field named "name" on this level of the hierarchy.

// then read its value which comes right after it.

if ( jsonReader.skipToField("name",JsonReader::Siblings) && jsonReader.read()) {

// deescape the value

jsonReader.undecorate();

// print it

printf("%s\r\n",jsonReader.value());

} else if(JsonReader::Error==jsonReader.nodeType()) {

// if we error out, print it

printf("\r\nError (%d): %s\r\n\r\n",jsonReader.lastError(),jsonReader.value());

return;

}

// close the file

lexContext.close();That's a simple sibling field search. Notice it found the right name field, even though there are several name fields that come earlier in the document. This is the only name field on the root object level of the hierarchy and that's why it chose that - due to JsonReader::Siblings. If we had used JsonReader::All, it would have reported "Matt Nix" rather than "Burn Notice" due to that being the value of the first name field that occurred in the document, even though it's nested under a subobject. We'd get the same result with JsonReader::All as we do in this case with JsonReader::Descendants, because name occurs with "Matt Nix" in a descendant object right at the top of the document.

It may seem like skipToField("myField",JsonReader::Siblings) is a great way to navigate, but there's a serious catch to it having to do with the way the specification for JSON works. Fields can be in any order, according to the spec. That means that some outfit like tmdb.com could decide they want to reorder the fields so they're no longer alphabetical, but most frequently accessed first, for example. Your code has to be able to handle that - essentially fields coming to you in orders you can't rely on. Due to that, if you need to retrieve multiple fields, like episode_number, name and season_number off the same object you can't reliably use skipToField() for that. Which one do you skip to first? That's the question you can't reliably answer because of the spec. Due to this wrinkle, you'll want to use a filtered in-memory tree for that kind of operation. That way, you can load all the fields off an object you need at once and examine them as a unit, which we're going to be doing in a little bit, but before we do, let's look at using the JsonReader::Descendants axis. We're going to be fetching the names of each production company for the show. production_companies is under the root object:

...

"production_companies": [

{

"id": 6981,

"logo_path": null,

"name": "Fuse Entertainment",

"origin_country": ""

},

{

"id": 6529,

"logo_path": null,

"name": "Fox Television Studios",

"origin_country": ""

}

],

...Here's the code for it:

// find the production_companies field on this level of the hierarchy, than read to its value

if (jsonReader.skipToField("production_companies",JsonReader::Siblings) && jsonReader.read()) {

// we want only the names that are under the current object. we have to pass a depth tracker

// to tell the reader where we are.

unsigned long int depth=0;

while (jsonReader.skipToField("name", JsonReader::Descendants,&depth) && jsonReader.read()) {

undecorate(); // take the quotes and escapes out

printf("%s\r\n",jsonReader.value());

}

}Note how we had to pass our "depth tracker" cookie here. This again is used to mark where we currently are so that on successive calls we know to read back to our level on the hierarchy and stop. If you don't pass one, the call will fail with JSON_ERROR_INVALID_ARGUMENT. You can nest these looped calls, but you'll want to use different depth tracker cookies for each if you do. The idea is to scope your depth tracker cookie with the life of your while loop. That will keep you out of trouble, or at the very least into the right kinds of trouble.

Using read()

Now that we've covered that, let's look at using read() and querying the parser. As mentioned, read executes a single step of the parse. You can then use various methods to get the information about the parsing state, like the type of node you're on or what its value is. It's easier to just demonstrate it than explain the entire flow, plus code speaks volumes, so here's code for dumping every single element of a file to the console:

// open the file

if (!lexContext.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

JsonReader jsonReader(lexContext);

// pull parsers return portions of the parse which you retrieve

// by calling their parse/read method in a loop.

while (jsonReader.read())

{

// what kind of JSON element are we on?

switch (jsonReader.nodeType())

{

case JsonReader::Value: // we're on a scalar value

printf("Value ");

switch (jsonReader.valueType())

{ // what type of value?

case JsonReader::String: // a string!

printf("String: ");

jsonReader.undecorate(); // remove all the nonsense

printf("%s\r\n", jsonReader.value()); // print it

break;

case JsonReader::Number: // a number!

printf("Number: %f\r\n", jsonReader.numericValue()); // print it

break;

case JsonReader::Boolean: // a boolean!

printf("Boolean: %s\r\n", jsonReader.booleanValue() ? "true" : "false");

break;

case JsonReader::Null: // a null!

printf("Null: (null)\r\n");

break;

}

break;

case JsonReader::Field: // this is a field

printf("Field %s\r\n", jsonReader.value());

break;

case JsonReader::Object: // an object start {

printf("Object (Start)\r\n");

break;

case JsonReader::EndObject: // an object end }

printf("Object (End)\r\n");

break;

case JsonReader::Array: // an array start [

printf("Array (Start)\r\n");

break;

case JsonReader::EndArray: // an array end ]

printf("Array (End)\r\n");

break;

case JsonReader::Error: // a bad thing

// maybe we ran out of memory, or the document was poorly formed

printf("Error: (%d) %s\r\n", jsonReader.lastError(), jsonReader.value());

break;

}

}

// always close the file

lexContext.close();read() is fast, but it will do well formedness checking and a full parse so it's the slowest way to navigate. Unless you're intending to write a document validator, use other options when they are available to you.

Using parseSubtree()

I saved this for last since it actually encompasses a lot more than the name suggests. Hidden in this function are powerful querying and filtering capabilities, and it's our secret sauce once we've navigated to within the general ballpark of our data using skipToField().

Using it requires additional setup because the objects it creates use a specialized allocator called a MemoryPool. You'll have to declare one of these before you use this function, and you'll have to profile to figure out what size you need, because it depends on the query. Luckily, the same query should always be about the same size, give or take for the varying lengths of actual field values, because the fields will always be known and the same, at least if you're using a whitelist filter. Make it bigger than you need, profile it, then trim to fit (always leaving some breathing room because data lengths can vary).

Luckily, it's not that hard to do. Declaring one is as simple as declaring a LexContext. Pick the one you need - usually StaticMemoryPool

StaticMemoryPool<1024> pool; // 1kB of data. That's often plenty.

// Profile, you might need a quarter of it.The biggest dictator of the number of bytes you'll need is the number of fields you'll need so choose your whitelist filter with that in mind. Obviously, this applies to nested objects as well so nest your filters!

In the following code, we skip to every episodes field in the document (similar to $..episodes in JSONPath), and once we find them for each one we run a filtered parseSubtree() that gives us the season_number, the episode_number, and the name. We then use those 3 fields values we got back from the filter to fill in a format string that gives us the episode in S##E## Name format which I chose because it is a common way to format media filenames. Here's the code:

LexContext<256> lexContext;

StaticMemoryPool<256> pool;

...

// open the file

if (!lexContext.open("./data.json")) {

printf("Json file not found\r\n");

return;

}

// create a JSON reader

JsonReader jsonReader(lexContext);

// prepare a simple filter

const char* fields[] = {"season_number","episode_number","name"};

JsonParseFilter filter(fields,3,JsonParseFilter::WhiteList);

// create some room to hold our return values

JsonElement* values[3];

filter.pvalues=values;

// for tracking

size_t max = 0;

size_t episodes=0;

// yeah, we're timing it for fun

milliseconds start = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

// skip to each field in the document named "episodes" regardless of where.

// JsonReader:All cuts through the hierarchy so running it repeatedly starting

// from the root is similar to doing $..episodes in JSONPath:

// (jsonReader.read() advances to the field's value, in this case an array)

while (jsonReader.skipToField("episodes", JsonReader::All) && jsonReader.read() ) {

// if it's an array let's move to the first element using read():

if(JsonReader::Array==jsonReader.nodeType() && jsonReader.read()) {

// while we still have more array elements...

while(JsonReader::EndArray!=jsonReader.nodeType()) {

// now parse that array element (an episode)

// parse with a filter in place.

// We only want season_number,episode_number and name:

JsonElement *pr= jsonReader.parseSubtree(pool,&filter);

if(nullptr==pr) {

printf("\r\nError (%d): %s\r\n\r\n",jsonReader.lastError(),jsonReader.value());

return;

} else {

// since we've whitelisted, the API filled in the values for us.

// It's easy to retrieve them

// normally you'd want to do checking here top.

printf("S%02dE%02d %s\r\n",

nullptr!=filter.pvalues[0]?(int)filter.pvalues[0]->integer():0,

nullptr!=filter.pvalues[1]?(int)filter.pvalues[1]->integer():0,

nullptr!=filter.pvalues[2]?filter.pvalues[2]->string():"(not found)");

// increase the episode count

++episodes;

}

// track the max amount of data we end up using at one time

if(pool.used()>max)

max=pool.used();

// recycle this for the next query

pool.freeAll();

}

} else if(JsonReader::Error==jsonReader.nodeType()) {

break;

}

}

if(JsonReader::Error==jsonReader.nodeType()) {

// report the error

printf("\r\nError (%d): %s\r\n\r\n",jsonReader.lastError(),jsonReader.value());

return;

}

// stop the "timer"

milliseconds end = duration_cast<milliseconds>(system_clock::now().time_since_epoch());

// report the statistics

printf("Max used bytes of pool: %d\r\nScanned %d episodes

and %llu characters in %d milliseconds\r\n",(int)max,(int)episodes,

lexContext.position()+1,(int)(end.count()-start.count()));

// always close the file

lexContext.close();The code is a bit thrown together but servicable. You can see we're even timing the whole mess. On my rickety old PC with an HDD, I get this output:

...

S07E03 Down Range

S07E04 Brothers in Arms

S07E05 Exit Plan

S07E06 All or Nothing

S07E07 Psychological Warfare

S07E08 Nature of the Beast

S07E09 Bitter Pill

S07E10 Things Unseen

S07E11 Tipping Point

S07E12 Sea Change

S07E13 Reckoning

Max used bytes of pool: 193

Scanned 112 episodes and 191288 characters in 4 millisecondsYour modern machine probably does even better. My ESP32 IoT device does significantly worse, but it's on a half speed SPI SD card reader using the default SD library so that's probably where most of the churn is. It's 200kB of data after all.

Scanned 112 episodes and 191288 characters in 2429 millisecondsBuffering the LexContext might improve this at the expense of more memory but I need to investigate to find where most of the time is taken. Again, I'm betting it's the I/O to the SD card. The big deal here is 193 bytes from a capacity of 256. That's all the pool space it took to perform this query, and that's brilliant when you're dealing with little devices. It doesn't count the LexContext size (256 bytes), the member variables on the JsonReader or the local stack space used to make the call. Maybe one day I'll look at that but that's a deep dive. In terms of what we know about, that's about one half kB to perform the operation, and at least on a PC it does so very quickly to boot.

I haven't really shown you how to use the string pooling, but honestly I only added it to enable loading larger documents with a lot of repetitive data somewhat efficiently. It's for corner cases, not usual use. Almost always, the solution involves loading less data in the first place - maybe shift more of your query outside of the in-memory document and schlep it onto the reader.

That said, this should be enough to get you started. I hope you enjoy this code.

Future Directions

I'm debating about adding a JSONPath mechanism for querying the in-memory documents. The main reason I don't want to is it encourages in-memory queries which is precisely the thing this library aims to cut down on and for what? More code? One good reason I can think of doing it is it potentially adds opportunities for doing more sophisticated data extraction than the filter allows for. Another reason would be to facilitate collating data from different levels of the hierarchy more readily. The chief downside is many queries require gobs of memory to store the resultsets and then requery off of those ad nauseum. It's just not set up for tiny machines. Still part of me thinks it should be up to the end developer to craft their JSONPath queries to use as little memory as possible, so the real question is if I did it, would anyone use it well? Or it would it just be abused generally?

I'm considering moving StaticLexContext's memory to use a MemoryPool, but it would complicate using LexContext slightly, and the pool would have to be dedicated. It couldn't be used for anything else at the same time. One good reason to do it would be to facilitate easier profiling. Right now even though the rule is simple, it's hard to know how much space LexContext needed to actually perform the operation. It's kind of a trial and error affair. For example, in the above code, I know the LexContext required less than 256 bytes to run that query, but how much less I have no idea without trying it. It could be that 64 satisfies it. Remember, it's the length of the longest value you need to examine (quotes and escapes included) plus the null terminator. That's easy to understand, but hard to actually figure out.

Eventually I might try to make this work on little 8 and 16 bit processors running the base Arduino SDK where things like

In general, this code is a work in progress, so keep checking back with my GitHub.

History

- 16th December, 2020 - Initial submission

- 22nd December, 2020 - Massive Update - new GitHub repo and new article (article available as of the 23rd)