Parse Anything with Parsley: A Different Approach

Updated on 2019-12-31

Use the Parsley compositional parser generator to parse a complicated grammar with backtracking.

Update: Bugfixes to grammars (minor changes - not reflected in article), and somewhat improved code generation. Addition of shared attribute which marks production functions public. Grammar is slightly more complex but captures comments now.

Article 2 with more bugfixes and the next step is here.

Introduction

Originally, Parsley simply generated recursive descent LL(1) based parsers. While it was very flexible for what it was, and had numerous features to reach past the limits of LL(1), eventually I considered the idea of using "sub-parsers" and "sub-grammars" to allow for simple and complex composition parsing, which in the end, makes it much easier to parse. It also allows me to factor the parsing into separate units, both resolving conflicts, extending the flexibility of the end parser(s) and breaking large grammars into maintainable chunks.

Please note that Parsley is a work in progress, so it's still a bit rough and cranky, but just give it some love and some patience and it will be fine.

Slang is a CodeDOM compliant subset of C# I use in my code generation tools. It allows you to write CodeDOM constructs using C# instead of building them by hand. There's more information about Slang at the link.

Slang is a CodeDOM compliant subset of C#

Originally, I wrote the Slang parser using a hand built recursive descent parsing technique similar to that used by Microsoft's production C# compiler. However, maintenance is a bear, and even though the source is partitioned across several files, it quickly becomes overwhelming. Unlike Microsoft, I don't have a team available to delegate building the different parts of the parser to.

To that end, I needed a tool that would help build me a parser. I considered using ANTLR but I don't like its API or grammar format, and the grammar I found for C# generated an 800K+ source file for parsing C#6 - which wouldn't parse! No thanks. Moving on, I found a grammar for Coco/R as well, but it uses so much embedded state to resolve its parse that it's hard to follow, leading me to the same maintenance and comprehension issues as my hand written parser! For various reasons, I'm sticking to LL parsing versus LR parsing so tools like Gppg are off the table, even if they could parse C#. If you don't know the difference between LL and LR parsing, it doesn't matter here, but LL parses top down, using the grammar to direct the parse, while LR parses from bottom up, using the next input to direct the parse. The first one uses the grammar to find valid inputs, the second uses inputs to try to match it to grammar. The second one doesn't lend itself to intuitive syntax directed actions.

Background

This is why I built Parsley. While I built it specifically for this task, it's a great general purpose parser generator, if still a bit immature. It lets you intermingle hand written parsing code with generated parsing code which is perfect for parsing really complicated grammars and grammars that are not LL(1) - that is, LL grammars that require more than one symbol of lookahead. This way, I could describe the LL(1) "easy" constructs in XBNF, like:

Expression<start, follows="semi rparen rbracket comma">=AssignExpression;

RelationalExpression = TermExpression { ("<"|"<="|">"|">=") TermExpression };

EqualityExpression = RelationalExpression { ("=="|"!=") RelationalExpression };

BitwiseAndExpression = EqualityExpression { "&" EqualityExpression };

BitwiseOrExpression = BitwiseAndExpression { "|" BitwiseAndExpression };while building the harder stuff using our own code. For things that Parsley can't generate for - the tougher constructs - I used Parsley's "virtual" feature which allows us to write parts of the grammar using a combination of C# or Slang (C# subset). Additionally, we can use Parsley's compositional grammar features to parse constructions which may otherwise conflict with the larger grammar. Furthermore, we can make use of Parsley's automatic backtracking feature which makes it easier to parse ambiguous constructions. Parsley uses trial and error to handle automatic backtracking, but we'll explore all of this below.

Prerequisites

This article already covers so much that I am punting the backgrounder on using Parsley to my article on it. It's strongly recommended that you read it first, as I won't be covering the basics again here. This grammar here is about two hundred lines long. This is about using all the features of Parsley in an advanced, and very complicated grammar.

This article also assumes you understand the basics of recursive descent parsing, which is a very basic parsing technique that lends itself to hand-rolled parsers. Parsley is somewhat rare in that it generates these recursive descent routines while most parsers that are generated use a table driven approach. Parsley takes full advantage of this routine based approach to allow you to do things that aren't readily accomplished using table driven parsers, like completely taking over the parse. Understanding the fundamentals of how they are built is critical here, but very easy to learn. Many of you have probably written one at some point, as it's the most intuitively logical way to parse something.

You really should have used parser generators before to fully understand this article, but I'll try to make those parts of the article that require it not necessary to understand the whole. Just absorb what you can.

It is also recommended that you understand Slang, the CodeDOM compliant subset of C# that I use in for code generation because Parsley uses it, you use it in your grammars, and it's the reason Parsley exists in the first place - to replace Slang's current and somewhat dodgy hand written parser which is hard to maintain and has horrible error reporting. Parsley was built to help ease those things.

Building this Mess

Parsley is provided as part of my larger build pack - a suite of tools for making build tools. It uses itself to build itself meaning you'll have to build it two times in release for the locking errors to go away, and then for everything to run. If you do not do this, your solution will not build.

Conceptualizing this Mess

We've got two major things we're juggling here, the grammar itself, and the generated code that comes from it.

We use the generated code for debugging if we have to. It's easy to set breakpoints in the generated parser code because despite the size, it's all exquisitely compartmentalized. Each non-terminal production/parsing construct has its own function and all the generated functions work one of two ways depending on whether they backtrack or not. However, both are easy to follow, and despite two different implementations, their interfaces are precisely the same.

Refresher on Advanced Parsley

Every parse function is named after the non-terminal production it represents. Terminals do not have or need parse functions. The signature of each Parse function is as follows: ParseNode ParseXXXX(ParserContext context), where XXXX is the name of your non-terminal production/parsing construct. This is especially important to remember in areas where we take over the parse using the virtual feature on our non-terminal productions, because we delegate to these functions. Otherwise, for codeless parse operations - hopefully most of them!, you don't need to worry about any of that.

When using special virtual non-terminal productions in the grammar, you declare them like this:

MyNonTerminal<firsts="MyFirst1 myFirst2 ..."> { // code here } : where { // code here }You don't always need the where clause, but you do need to declare firsts in most cases so that the parser can find your production. firsts is composed of a space delimited list of grammar symbols (either terminal or non-terminal) which can appear at the start of your production. Without this, the parser won't know to evaluate your virtual construction, and will never call it. In rare cases, this is okay, as you might be using this virtual only from other virtuals which call it in code. However, if the grammar is to "see" it, you need to declare your firsts. This constitutes the overwhelming majority of cases. If this is not clear, don't worry, as we'll be getting to it with real world code later.

I noted the signature of the parse routines above as ParseNode ParseXXXX(ParserContext context), where XXXX is the name of production. You will be implementing this yourself in a virtual production. See the context argument and the ParseNode return value? Thankfully, it's not that much to deal with. This constitutes your essential "API" inside each virtual parse function. It's your responsibility to use context and the available ParseXXXX() functions to parse your tokens and return a ParseNode from it, handling all errors yourself, and dealing with terminals that appear in your input. This was covered in the Parsley article I linked to above, so for further reading, please see toward the end of that article for a refresher.

On using where: Where does two things; it allows you to override a conflict in the grammar and specify your own narrowing constraint on your production. Your responsibility is to use context to examine the input, and return a boolean value indicating whether your production passed/qualified/will be evaluated. If you simply return true, from a regular, non-virtual production, it will enable automatic backtracking, which we'll eventually cover. In the previous Parsley article, we went over using where to distinguish between an identifier and a keyword. In the where routine, note that context will not advance the main cursor, so you can read ahead with it without interfering with the parse. You cannot modify the real ParserContext from inside a where clause. Note that backtracking is never automatic for virtual productions, because the entire code for the parsing method is left to you.

New Features in Parsley

There are some new features which we use in the updated codebase provided with this article. We can manipulate the parse table using the firsts and follows to customize our parsing. We went over firsts above briefly, but it can be applied to any non-terminal production, not just virtuals. What it actually does is add to the existing list of what can occur as the first item in your production. You usually won't need to extend firsts except in the case of virtual productions as above. However, whenever something only appears in code, Parsley will need some help integrating it into the parse, because it can't discern the grammar from the code alone. firsts is particularly handy here.

The other parse table manipulation attribute is follows. Follows allows you to specify what can come after a particular production. Note that this does not mean the final symbol in the production. Rather, it means anything that can follow it. The parse table needs this information in order to generate the code, and is automatically gleaned from the grammar in almost all cases, but sometimes we may want to extend the list of possibilities of what can follow a member for our own purposes. One of those purposes might be providing an alternate ending condition for parsing from a subparser/subgrammar.

NamespaceName<follows="lbrace">= Identifier { "." Identifier };Here, we've told the parser that NamespaceName could be followed with an opening brace {. The reason for this is in our particular case, NamespaceName only ever gets called in the grammar as part of a using directive, so the parser doesn't know that lbrace can follow it, because it can't trace the grammar to figure it out. So far it only knows about ; because it appears in the UsingDirective production (omitted here). The other case it's called is when parsing a namespace which it does in code using a virtual production. When used in a namespace, the name can be followed by an opening brace. Since that rule is only enforced in code, we need to hint at the grammar that it can stop at an opening brace too. Remember you can specify multiple symbols delimited by space, but you cannot use literal symbol values like ")". There are other cases where you might use this that we'll cover later. This is easy to understand if you understand first and follows sets in LL parsing, but it isn't required to know in order to use this. If you extend firsts or follows with something that is already present, it will simply produce a warning about a duplicate rule.

There are additional attributes introduced, such as abstract, and virtual but you shouldn't need them. However, they can be specified for clarity.

One that you might need is dependency. This attribute should be applied to any non-terminal production that is only referred to in code, so the grammar doesn't eliminate it. If you need to do the same thing for a terminal production, set the terminal attribute.

One of the ways you can find that you need to add a follows to a production is using the debugger. When it syntax errors, find the method that raised context.Error() and find out what the list of expected symbols was - it will be shown through the various comparisons in the if block. One of the if blocks should probably be returning a node with new ParseNode[0] as its children - at least if it's part of a right associative list, as is often is. You need to add a value to the comparison in that if essentially. How you do it is to find (using the call stack if you must) the nearest non-terminal production that appears in the grammar, so like above, we'd crawl up the stack past the factored non-terminals until we find ParseNamespaceName() in the call stack. Then we want to add lbrace to the follows sets to place that if condition in there, so that's what we do, and wham, there's your parse.

People experienced with parsers and generators may be wondering why we need to give the grammar all these hints. The reasons are virtual productions and @imported sub-parsers. The parser cannot trace through the other parser's grammar without adding all of its rules to the master grammar, potentially creating conflicts and ultimately defeating the purpose of the sub-parser. The parser also cannot trace code so it has no idea how your code in virtual productions is using the other parse functions (the other productions essentially). So when you need to use these features, you need to give Parsley a little bit of help sometimes.

On Using Parsley Effectively for Large Grammars

Parsley is not fast enough to be feasible for large, language independent grammars. The time it takes to translate from one programming language to another is simply too much work. However, Parsley is plenty fast in C# only mode with the /fast option. It is highly recommended that you use this with large grammars.

The other option that /fast opens up is the ability to use partial classes with your parser. This means you can move almost all of the code out of your grammar and into a regular C# partial class file that aliases your parser class.

You really should when working with a lot of code. Slang is still experimental, and the error reporting is dodgy - which is one of the reasons I'm making a new parser here! Keeping it in a partial class, similar to using a "codebehind" file in ASP.NET allows you to keep your major code in a separate file, where it really should go, making the grammar clearer, and the code easier to debug. Plus you get Intellisense in Visual Studio (or the equivalent in MonoDevelop) that way.

This technique does not work with syntax directed actions that generate Evaluate() methods due to the use of macros, which require post-processing. In a future version, I'll allow codebehind for syntax directed actions, but it won't provide macro support.

It's not a bad idea to prototype even small grammars with /fast as it will shorten build times.

Note that when using /fast, it's a good idea, but not required to use a Gplex lexer (/gplex option) with Parsley. This allows for faster generation and more features, at the cost of a small amount of performance, and language independence. You'll usually need the extra features, particularly the unicode support, and the rolex lexer spec generation in Parsley isn't currently up to date with the latest features.

Using /fast and /gplex together should cut your build times to something very reasonable, even for really large grammars, but your code is C# only.

The other thing about large grammars is it might be easier to build them by factoring into subparsers/subgrammars. These are exactly like regular parsers and grammars save for two differences: Their start production has a follows attribute which specify its alternate terminating condition, if necessary which would normally be end of stream, and the implementation of the top level public parse methods is somewhat different in that it checks for extra mess at the end and throws if it finds it. This is due to the addition of follows elements which may cause early termination.

We use the @import feature in XBNF to import a sub-parser, which is simply another XBNF file, maybe with its own imports and so on like C++ headers. Each import path is relative to the document that imported it. Note that duplicate references to the same filename are okay as only the first will be loaded. Also URLs might work but it hasn't been tested, and relative paths will not work with urls.

@import "SlangExpression.xbnf"Note that any \ characters must be escaped.

The @import directives must appear before any productions. Lexer order is determined by import order so you may need to use a negative priority attribute on one or more of your terminals if you need them to appear last. Note that there's a bug (needs implementation) right now with /ifstale, where it won't trace your imports, so you'll have to dirty the master file for it to know it needs to rebuild the grammar.

All grammars that are imported plus the master grammar create one file, with one class per grammar. All of the grammars share the same lexer, which is a combination of the terminals in all of the grammars. All grammars share the same overarching symbol table. Constants will only be added to the class if they are used in the grammar, or if the abstract attribute is set, or the terminal attribute is set. Productions that are only used in the code must be hinted with one of the above if they are to create constants for that parser class. Note that you cannot declare duplicate productions in the different grammars, so only one grammar can control the attributes for a particular terminal or non-terminal production. A downside of this is that in the code for the grammar sometimes you cannot tease a constant out, because a terminal is defined in another grammar, but you don't use that terminal in your grammar, only in code. In this case, you'll have to refer to the constant on the other parser class (created with the other grammar) when you use it in code. Sorry. I know it's vaguely annoying but I haven't figured out a solution other than tracing the code or dumping all the constants on to each parser class, but this created huge classes when the grammars were large so I decided against it.

Each of the grammars is its own parser, with its own productions and rules. This means that each is a black box to the other. The upshot is, two parsers can have similar rules without conflicting. This is very helpful for segregating, say C# expressions and C# statements, which occasionally look very much alike to a parser. This is the compositional parsing feature of Parsley. You cannot specify the class name of an imported parser. It will always be derived by taking the name of the start production in your grammar. Remember, the parsers share the same symbol table and lexer/tokenizer despite being different parsers with different "parse tables"

What we're going to do to parse Slang is use a combination of these features in order to turn the language into a parse tree. We'll finally explore that in the next section.

Using this Mess

First, let's parse some expressions!

// Slang.xbnf

// This is the XBNF spec for Slang Expressions (gplex version - unicode enabled)

// Slang is a CodeDOM compliant subset of C#

// Expressions

Expression<start, follows="semi rparen rbracket comma">=AssignExpression;

RelationalExpression = TermExpression { ("<"|"<="|">"|">=") TermExpression };

EqualityExpression = RelationalExpression { ("=="|"!=") RelationalExpression };

BitwiseAndExpression = EqualityExpression { "&" EqualityExpression };

BitwiseOrExpression = BitwiseAndExpression { "|" BitwiseAndExpression };

AndExpression= BitwiseOrExpression { "&&" BitwiseOrExpression };

OrExpression= AndExpression { "||" AndExpression };

AssignExpression= OrExpression { ("="|"+="|"-="|"*="|"/="|"%="|"&="|"|=") OrExpression };

TermExpression= FactorExpression { ("+"|"-") FactorExpression };

FactorExpression= UnaryExpression { ("*"|"/"|"%") UnaryExpression };

MemberFieldRef = "." Identifier;

MemberInvokeRef = "(" [ MethodArg { "," MethodArg } ] ")";

MemberIndexerRef = "[" Expression { "," Expression } "]";

MemberAnyRef<collapsed> = MemberFieldRef | MemberInvokeRef | MemberIndexerRef ;

MethodArg = [ outKeyword | refKeyword ] Expression;

TypeRef = Type;

IntrinsicType= boolType |

charType |

stringType |

floatType |

doubleType |

decimalType |

sbyteType |

byteType |

shortType |

ushortType |

intType |

uintType |

longType |

ulongType |

objectType ;

TypeBase = (identifier { "." identifier }) | IntrinsicType;

// the first follows is for fields and methods, second two

// are for base types and custom attributes, respectively

Type<follows="Identifier lbrace rbracket">=TypeElement { TypeArraySpec };

TypeElement = TypeBase [ TypeGenericPart ];

TypeGenericPart= "<" [ Type { "," Type } ] ">";

TypeArraySpec= "[" { TypeArraySpecRank } "]";

TypeArraySpecRank = comma;

// parse casts manually because they're weird. it's just easier

CastExpression<virtual, firsts="lparen"> {

return _ParseCastExpression(context);

}

// much easier to code this as a virtual and parse manually

ArraySpec<virtual,firsts="lbracket"> {

return _ParseArraySpec(context);

}

NewExpression= newKeyword TypeElement ( NewObjectPart | NewArrayPart );

NewObjectPart<collapsed>= "(" [ Expression { "," Expression } ] ")";

NewArrayPart<collapsed>= ArraySpec;

// this is necessary so we can get Parsley to generate a

// method called ParseTypeCastExpressionPart() which we use

// when resolving casts

TypeCastExpressionPart<dependency, collapsed>= Type ")";

ArraySpecExpressionList<dependency ,collapsed>= Expression { "," Expression } "]";

ArrayInitializer<dependency>= "=" "{" [ Expression { "," Expression } ] "}";

SubExpression<collapsed> = "(" Expression ")" : where { return !_IsCastExpression(context);}

// use the where clause to override first first conflict.

UnaryExpression= ("+"|"-"|"!") UnaryExpression | ("++"|"--") PrimaryExpression |

SubExpression | PrimaryExpression : where { return true;}

FieldRef<dependency>= Identifier { MemberAnyRef };

// This is a virtual but here's the basic description

//TypeOrFieldRef = ( Identifier | IntrinsicType )

//{ MemberAnyRef } | (TypeRef) {MemberAnyRef}+ : where {return true;}

TypeOrFieldRef<virtual,firsts="Identifier IntrinsicType">

{ return _ParseTypeOrFieldRef(context); }

PrimaryExpression=

TypeOrFieldRef { MemberAnyRef } |

verbatimStringLiteral { MemberAnyRef } |

characterLiteral { MemberAnyRef } |

integerLiteral { MemberAnyRef } |

floatLiteral { MemberAnyRef } |

stringLiteral { MemberAnyRef } |

boolLiteral { MemberAnyRef } |

nullLiteral |

CastExpression |

typeOf "(" Type ")" { MemberAnyRef } |

nameOf "(" Identifier ")" { MemberAnyRef } |

defaultOf "(" Type ")" { MemberAnyRef } |

NewExpression { MemberAnyRef } |

thisRef { MemberAnyRef } |

baseRef { MemberAnyRef } ;

// Identifier exists as a non-terminal solely to attach a semantic predicate to identifiers.

// we collapse it because we don't need it in the final parse tree but in the grammar

// we have to refer to "Identifier" instead of identifier or verbatimIdentifer to apply

// this constraint.

Identifier<collapsed> = verbatimIdentifier |

identifier : where { return !Keywords.Contains(context.Value); }

// be careful about the order of the terminals.

// remember they have priorities in the final lexer

verbatimIdentifier='@(_|[[:IsLetter:]])(_|[[:IsLetterOrDigit:]])*';

// begin keywords

outKeyword="out";

refKeyword="ref";

typeOf="typeof";

nameOf="nameOf";

defaultOf="default";

newKeyword="new";

stringType="string";

boolType="bool";

charType="char";

floatType="float";

doubleType="double";

decimalType="decimal";

sbyteType="sbyte";

byteType="byte";

shortType="short";

ushortType="ushort";

intType="int";

uintType="uint";

longType="long";

ulongType="ulong";

objectType="object";

boolLiteral = "true|false";

nullLiteral = "null";

thisRef = "this";

baseRef = "base";

verbatimStringLiteral='@"([^"|""])*"';

// bury this

identifier<priority=-100>='(_|[[:IsLetter:]])(_|[[:IsLetterOrDigit:]])*';

stringLiteral='"([^\\"\'\a\b\f\n\r\t\v\0]|\\[^\r\n]|

\\[0-7]{3}|\\x[0-9A-Fa-f]{2}|\\u[0-9A-Fa-f]{4}|\\U[0-9A-Fa-f]{8})*"';

characterLiteral='[\u0027]([^\\"\'\a\b\f\n\r\t\v\0]|\\[^\r\n]|\\[0-7]{3}|\\

x[0-9A-Fa-f]{2}|\\u[0-9A-Fa-f]{4}|\\U[0-9A-Fa-f]{8})[\u0027]';

lte="<=";

lt="<";

gte=">=";

gt=">";

eqEq="==";

notEq="!=";

eq="=";

inc="++";

addAssign="+=";

add="+";

dec="--";

subAssign="-=";

sub="-";

mulAssign="*=";

mul="*";

divAssign="/=";

div="/";

modAssign="%=";

mod="%";

and="&&";

bitwiseAndAssign="&=";

bitwiseAnd="&";

or="||";

bitwiseOrAssign="|=";

bitwiseOr="|";

not="!";

lbracket="[";

rbracket="]";

lparen="(";

rparen=")";

lbrace="{";

rbrace="}";

comma=",";

colonColon="::";

dot=".";

integerLiteral = '(0x[0-9A-Fa-f]{1,16}|([0-9]+))([Uu][Ll]?|[Ll][Uu]?)?';

floatLiteral= '(([0-9]+)(\.[0-9]+)?([Ee][\+\-]?[0-9]+)?[DdMmFf]?)|

((\.[0-9]+)([Ee][\+\-]?[0-9]+)?[DdMmFf]?)';

lineComment<hidden>='\/\/[^\n]*';

blockComment<hidden,blockEnd="*/">="/*";

whitespace<hidden>='[ \t\r\n\v\f]+';That's not too bad. You can see we have a few virtual productions that forward to methods not shown here. They are in the "codebehind" partial class. We also have a where constraint on Identifier to make sure it isn't a keyword. We also have assigned -100 to identifier's priority attribute. This is important to make sure we prioritize keywords in the tokenizer/lexer. Don't confuse this with Identifier, which unlike identifier is non-terminal.

TypeCastExpressionPart is somewhat interesting in that it's a Type followed by a closing parenthesis. We use it to match part of a cast. Namely, the part in bold, here: ( string ) foo; Doing it this way is a little trick to tell the parser that a Type can be followed by a closing parenthesis. The other way to do this, which results in slightly different behavior, is to specify follows="rparen" on Type to tell the parser the same thing. The difference is the former will "eat" the closing parenthesis, whereas the latter will not. In the end, since it's collapsed, it really doesn't matter how we did it, because the child nodes will simply be appended to the parent anyway, eliminating this part while picking up the underlying type and parenthesis. Either way, it's not exactly intuitive at first, yet this is a pattern that crops up in any grammar, for most any parser generator, so it's good to understand how it works. Most parser generators require you to do it the first way, but Parsley gives you the second way as well. I often use the latter technique in this grammar, but the above is an exception.

We also have a where clause on Unary expression that evaluates to true. Because of this, we won't see the conflicts between this and the CastExpression underneath the PrimaryExpression that would otherwise crop up, but it does force the parser to create backtracking code here, even though we won't be using it here this case - although we do use it in other cases. That's okay, as it doesn't really impact the parse. The other option would be to parse it ourselves using a virtual production. Note as I said, that we do backtrack, but later when we check whether an expression is a cast or not - see below:

We have a where clause on SubExpression which is because a cast and an expression in parentheses look pretty much the same. For example, (Foo.Bar) could be a parenthesized expression (A reference to Foo's Bar member) or it might be a cast to type Foo.Bar. We can't know for sure without continuing further and looking for the Expression that follows the closing parenthesis. That's what the where clause does in this case. Remember that inside a where, context never changes the primary cursor, so we can parse along it as much as we need to without impacting the rest of the parse.

Let's see some of the code we delegated to:

static bool _IsCastExpression(ParserContext context)

{

context = context.GetLookAhead(true);

try

{

if (lparen != context.SymbolId)

return false;

context.Advance();

ParseNode type = ParseTypeCastExpressionPart(context);

ParseNode expr = ParseUnaryExpression(context);

return true;

}

catch (SyntaxException)

{

}

return false;

}First, note that we replace context with a lookahead context. We didn't need to as long as this method was only called inside a where clause. However, I didn't want it to be brittle, and creating another lookahead cursor is pretty cheap. Parsley was designed for lookahead. Use it as much as you need to. There are performance disadvantages to backtracking no matter what you do, because you're examining the same text more than once. It's the nature of the algorithm, but given that disadvantage, Parsley does lookahead cursors themselves very efficiently using the code at that link, and lookahead on lookahead is only negligibly less performant than not wrapping again. The bottom line is this is extra, usually, but it doesn't matter. We pass true when we get our lookahead simply to avoid having to call EnsureStarted(). It just starts it for us.

Next inside the try block, we look for a ( and if we don't find it, we immediately return false. After that, we advance over the parenthesis and delegate to the TypeCastExpressionPart that's in the grammar - we explored it just above. Finally, we parse our expression, delegating once again, to the grammar's generated parse method for that. If neither raises a syntax error, it's a cast. Otherwise, by process of elimination, it's a subexpression.

We don't actually need the type or expr variables since they just get tossed, but I added them for clarity so you could see the return values were getting tossed. This method makes it so when we encounter a ( in our expressions, we can tell the difference between a subexpression and a cast expression.

Meanwhile _ParseCastExpression() is simply doing the same thing, but building a parse node or raising errors as necessary.

We run into issues parsing things like foo[10][][,,,] and while it's possible to build this using grammar constructs, we run into an ambiguity with the first element, which might not have an expression inside it. Basically, the grammar can't distinguish foo[10][][,,,] because the first element can have an expression but might not, and the remaining elements can't. Because of this, we can't tell if the first element is even specified. While it should be possible to make this work LL(1) - I think - it's just easier to write a virtual production for it, as shown here:

static ParseNode _ParseArraySpec(ParserContext context)

{

int line = context.Line;

int column = context.Column;

long position = context.Position;

if (lbracket!=context.SymbolId)

context.Error("Expecting start of array spec");

ParseNode lb = null;

ParseNode expr = null;

ParseNode init = null;

ParserContext pc = context.GetLookAhead(true);

pc.Advance();

if (rbracket!=context.SymbolId)

{

lb = new ParseNode(lbracket, "lbracket", context.Value,

context.Line, context.Column, context.Position);

context.Advance();

expr = ParseArraySpecExpressionList(context);

return new ParseNode(ArraySpec, "ArraySpec",

new ParseNode[] { expr }, line, column, position);

}

else

{

expr = ParseTypeArraySpec(context);

init = ParseArrayInitializer(context);

return new ParseNode(ArraySpec, "ArraySpec",

new ParseNode[] { expr, init }, line, column, position);

}

}Once again, a lot of our work is simply delegated to existing productions like ArraySpecExpressionList. We just have to parse the different pieces and compose them into a new ParseNode. See how we're using a little bit of lookahead here? That's so we can solve that ambiguity I mentioned above.

And the worst beast of them all, is TypeOrFieldRef. This is an incredibly ambiguous parse, with convoluted logic. I don't know how to make any parser generator parse this cleanly, if at all, but with Parsley, again, we have virtual productions to give us full control when we need it. Basically, we have to distinguish between the type reference Foo.Bar.Baz

For now, in the grammar above, our start production is Expression which will allow you to parse expressions. We'll be using this as a subparser in the next section as we add more to the overall grammar, but for now, it's top level.

You can use it like this:

var test = "(object)4*(2+1)";

var tokenizer = new SlangTokenizer(test);

_WriteTree(SlangParser.Parse(tokenizer),Console.Out);That's pretty easy. You'll note that the expression above parses even though it won't compile. That's fine since, it is not a parser's job to validate the correctness of your code, only the correctness of your syntax. It doesn't know anything about object except that it's an intrinsic type. A compiler would validate this code only after the code was parsed.

Roll up your sleeves, because we're just getting started.

Here's our next bit:

// Slang.xbnf

// This is the XBNF spec for Slang Statements (gplex version - unicode enabled)

// Slang is a CodeDOM compliant subset of C#

@import "SlangExpression.xbnf";

// Statements

// must reference a symbol here rather than ";" or XBNF thinks it's a terminal

// TODO: test terminal=false attribute setting in Parsley

EmptyStatement= semi;

VariableDeclarationStatement= (varType | Type) Identifier [ "=" Expression ] ";";

ExpressionStatement=Expression ";" : where { return _WhereExpressionStatement(context); }

// enable automatic backtracking here

VariableDeclarationOrLabelOrExpressionStatement<collapsed>=

VariableDeclarationStatement|

ExpressionStatement |

LabelStatement

: where { return true; }

ElsePart<collapsed> = "else" StatementOrBlock;

IfStatementPart<collapsed>= "if" "(" Expression ")" StatementOrBlock ;

// backtracking seems to be choosing incorrectly on trailing else here

// make it virtual

//IfStatement=IfStatementPart [ ElsePart ]

IfStatement<virtual, firsts="ifKeyword"> { return _ParseIfStatement(context);}

GotoStatement= "goto" identifier ";";

// we already check to disambiguate in ExpressionStatement,

// so all we do here is override the first first conflict

LocalAssignStatement<dependency, collapsed>=ExpressionStatement |

VariableDeclarationStatement : where { return true; }

// for trailing part of for first line:

ForIncPart<dependency, collapsed> = [ Expression ] ")";

// "for" "(" (LocalAssignStatement | EmptyStatement)

// (Expression ";" | EmptyStatement) ForIncPart StatementOrBlock

ForStatement<virtual, firsts="forKeyword"> { return _ParseForStatement(context); }

: where {return true;} // enable automatic backtracking

WhileStatement= "while" "(" Expression ")" StatementOrBlock;

ReturnStatement= "return" Expression ";";

ThrowStatement= "throw" [ Expression ] ";";

TryStatement= "try" StatementBlock ( { CatchClause }+ [ FinallyPart ] | FinallyPart );

CatchClause= "catch" "(" Type [ Identifier ] ")" StatementBlock;

FinallyPart<collapsed> = "finally" StatementBlock;

// look for : to disambiguate between this and a primary expression

LabelStatement= identifier ":" : where {

context.Advance();

return colon==context.SymbolId;

}

StatementOrBlock = (Statement | StatementBlock);

Statement<start>=

EmptyStatement |

VariableDeclarationOrLabelOrExpressionStatement |

IfStatement |

GotoStatement |

ForStatement |

WhileStatement |

ReturnStatement |

GotoStatement |

TryStatement ;

StatementBlock= "{" {Statement} "}";

ifKeyword="if";

gotoKeyword="goto";

elseKeyword="else";

// have to hint forKeyword as terminal because it never appears except

// in code or attributes

forKeyword<terminal>="for";

throwKeyword="throw";

whileKeyword="while";

returnKeyword="return";

tryKeyword="try";

catchKeyword="catch";

finallyKeyword="finally";

semi=";";

varType="var";

colon=":";

directive<hidden,blockEnd="\n">='#[A-Za-z]+';That's a pretty little mess, isn't it? Luckily, we've been able to define most of it in the grammar rather than code. Note that we're now using @import to import the expression grammar we build above.

I probably didn't need to make ForStatement virtual, but it was easy, and otherwise the grammar for a for is complicated due to factoring out conflicts with local assign. Note that we have defined constructs like LocalAssignStatement because we can just delegate to the parser for that rather than parsing it ourselves. We make judicious use of the parser's built functions like ExpressionStatement to make parsing that pretty painless:

static ParseNode _ParseForStatement(ParserContext context)

{

var line = context.Line;

var column = context.Column;

var position = context.Position;

var children = new List<ParseNode>();

if (forKeyword != context.SymbolId)

context.Error("Expecting for");

children.Add(new ParseNode(forKeyword, "forKeyword", "for",

context.Line, context.Column, context.Position));

context.Advance();

if (lparen != context.SymbolId)

context.Error("Expecting ( in for loop");

children.Add(new ParseNode(lparen, "lparen",

"(", context.Line, context.Column, context.Position));

context.Advance();

if (semi == context.SymbolId)

{

children.AddRange(ParseEmptyStatement(context).Children);

}

else

children.Add(ParseLocalAssignStatement(context));

if (semi == context.SymbolId)

{

children.AddRange(ParseEmptyStatement(context).Children);

}

else

{

children.AddRange(ParseExpressionStatement(context).Children);

}

children.AddRange(ParseForIncPart(context).Children);

children.Add(ParseStatementOrBlock(context));

return new ParseNode(ForStatement, "ForStatement",

children.ToArray(), line, column, position);

}You can see it's all pretty straightforward, with much of the work delegated to generated parse routines like ParseExpressionStatement().

The where on LabelStatement is trivial. It simply looks for an identifier (not Identifier, which would include things like @namespace) followed by ":" to distinguish - trivial with our lookahead.

ExpressionStatement is just a little trickier because we have to make sure we don't confuse it with a VariableDeclarationStatement, which can look similar to an expression at the beginning.

Now things get trickier, and it's a good thing Parsley was designed for intermingling hand written code as we'll be using even more of it in the next section.

Types and members are incredibly ambiguous in C# and thus Slang. You have possible leading custom attributes followed by member attributes finally followed by something that might be a field, property, constructor, method, or nested type. By the time you figure out what it is, you're well into the parse. We take over parsing members ourselves. We also take over much of type declaration parsing because it gets ambiguous as well, particularly where leading custom attributes are concerned. It should be noted that LL(k) parsers like ANTLR should be able to resolve this without needing too much massaging, but Parsley is LL(1). I will make it LL(k) or even LL(*) eventually but I don't know how yet.

@import "SlangStatement.xbnf";

@import "SlangExpression.xbnf";

// Custom Attributes

CustomAttribute= TypeBase [ "(" [ CustomAttributeArgList ] ")"];

//CustomAttributeArgList<virtual, firsts="Identifier Expression">

//{ return _ParseCustomAttributeArgList(context); }

CustomAttributeArgList = CustomAttributeArg { "," CustomAttributeArg };

CustomAttributeArg = Identifier "=" Expression | Expression : where { return true; }

CustomAttributeTarget= (assemblyKeyword | returnKeyword) ":";

CustomAttributeGroup<follows="Member TypeDecl">=

"[" [ CustomAttributeTarget ] CustomAttribute { "," CustomAttribute } "]";

CustomAttributeGroups<dependency,

follows="Member TypeDecl Identifier namespaceKeyword">= { CustomAttributeGroup }+;

// Types

// since it's only used in code, we need to give it follows

TypeAttributes<follows="classKeyword enumKeyword structKeyword

interfaceKeyword partialKeyword">= { publicKeyword | internalKeyword | privateKeyword };

EnumPart<collapsed> = "{" EnumFields "}" | ":" Type "{" EnumFields "}";

EnumFields<collapsed>= [ EnumField { "," EnumField } ];

EnumField= Identifier "=" Expression ;

Where<dependency,follows="lbrace">= "where" WhereClauses;

WhereClauses<dependency,virtual,firsts="Identifier"> { return _ParseWhereClauses(context);}

: where { return !ExpressionParser.Keywords.Contains(context.Value); }

WhereClause<dependency,follows="lbrace">=

Identifier ":" WhereClausePart { "," WhereClausePart };

WhereClausePart= (Type | newKeyword "(" ")" );

BaseType<dependency,collapsed,follows="comma whereKeyword lbrace">= Type;

BaseTypes<abstract>;

TypeDeclPart<virtual,firsts="colon whereKeyword lbrace", follows="rbrace">

{ return _ParseTypeDeclPart(context);}

TypeParams<follows="colon whereKeyword lbrace"> =

[ "<" [ CustomAttributeGroup ] Identifier { "," Identifier } ">" ];

// we narrow the attributes this accepts as a base in our parse routine for parse member

// TODO: do it for non-nested types

Enum<dependency>= MemberAttributes "enum" Identifier EnumPart;

Struct<dependency>= MemberAttributes "struct" Identifier TypeParams TypeDeclPart;

Class<dependency>= MemberAttributes "class" Identifier TypeParams TypeDeclPart;

Interface<dependency>= MemberAttributes "interface" Identifier

[ "<" TypeParams ">" ] TypeDeclPart;

TypeDecl<start,virtual,follows="TypeDecl usingKeyword namespaceKeyword rbrace lbracket",

firsts="TypeAttributes structKeyword classKeyword enumKeyword

interfaceKeyword partialKeyword lbracket"> { return _ParseTypeDecl

(context,false,null,context.Line,context.Column,context.Position,null); }

// Members

MemberAttribute<collapsed>= newKeyword | constKeyword | publicKeyword |

protectedKeyword | internalKeyword | privateKeyword | staticKeyword | overrideKeyword;

MemberAttributes<dependency> = { MemberAttribute };

// private implementation types are really difficult to parse so we use a virtual

// for it. The trouble is in the ambiguity because the type leads the identifier, so

// it's hard to know where the type ends and the identifier begins.

// note that we restrict the Identifier below,

// by copying it's where clause.

PrivateImplementationType<virtual,firsts="Identifier">

{ return _ParsePrivateImplementationType(context); }

: where { return !ExpressionParser.Keywords.Contains(context.Value); }

// need the follows because it's only referenced by code

// the grammar can't trace it

MethodParamList<dependency,follows="rparen">= [ MethodParam { "," MethodParam } ];

MethodParam= [ outKeyword | refKeyword ] Type Identifier;

ParamList<dependency>= [ Param { "," Param } ];

Param= Type Identifier;

// property accessors are weird for the parser because one

// can be optional, but only one of each may be specified

// and in any order. This is easier with a virtual

PropertyAccessors<virtual,firsts="PropertyGet PropertySet">

{ return _ParsePropertyAccessors(context); }

PropertyGet<dependency>= "get" ( StatementBlock | ";" );

PropertySet<dependency>= "set" ( StatementBlock | ";" );

ConstructorChain = ( "base" | "this" ) "(" ParamList ")";

// below we add rbrace to the follows sets for each to

// production below to allow member decls to be inside a

// { } block (for type decls)

Constructor<abstract>;

Method<abstract>;

Property<abstract>;

Event<abstract>;

Field<abstract>;

// methods/properties and fields are also tough to disambiguate.

// we could have used automatic backtracking for below but the error reporting

// is just bad with it right now. Better to write a bunch of code because the

// errors in the code inside methods and such were bubbling up here and

// interfering with error reporting

Member<virtual,follows="rbrace",

firsts="lbracket MemberAttributes Type eventKeyword Identifier">

{

return _ParseMember(context);

} : where { return true; } // ignore conflicts here. handled by the routine

Members<virtual,firsts="Member"> { return _ParseMembers(context); }

: where { return true; } // method handles first-first conflicts

assemblyKeyword="assembly"; // for assembly targets

voidType<terminal>="void"; // basically for methods

partialKeyword="partial";

classKeyword="class";

enumKeyword="enum";

structKeyword="struct";

interfaceKeyword="interface";

getKeyword="get";

setKeyword="set";

eventKeyword="event";

publicKeyword="public";

privateKeyword="private";

protectedKeyword="protected";

internalKeyword="internal";

staticKeyword="static";

// specify terminal here since this is only reference in code

// we need to make sure it generates a constant

abstractKeyword<terminal>="abstract";

constKeyword="const";

overrideKeyword="override";

whereKeyword="where";Didn't I warn you? We delegate to methods a lot here. There's simply too much ambiguity for the generated methods to handle cleanly. Parsing things like the private implementation type (IEnumerator IEnumerable.GetEnumerator()) get really difficult for a parser to handle. Also, property accessors are complicated because they can appear in any order, and there must be only one of each, maximum. This can be hard to describe in a grammar. It's doable but it forces you to write out every possible case. Note we've got a few abstract non-terminal productions. We'll need the constants that create in order to build parse nodes with them.

Private implementation types are one of the trickier ones to parse, and use a technique I employ as a last resort:

static ParseNode _ParsePrivateImplementationType(ParserContext context)

{

var l = context.Line;

var c = context.Column;

var p = context.Position;

var pc2 = context.GetLookAhead(true);

// here's a trick. We're going to capture a subset of tokens and then

// create a new ParserContext, feeding it our subset.

var toks = new List<Token>();

while (EosSymbol != pc2.SymbolId &&

(identifier2 == pc2.SymbolId ||

verbatimIdentifier == pc2.SymbolId ||

ExpressionParser.dot == pc2.SymbolId))

{

toks.Add(pc2.Current);

pc2.Advance();

}

if (EosSymbol == pc2.SymbolId)

pc2.Error("Unexpected end of file parsing private implementation type");

if (2 < toks.Count)

{

// remove the last two tokens

toks.RemoveAt(toks.Count - 1);

toks.RemoveAt(toks.Count - 1);

// now manufacture a comma token

// to get ParseType to terminate

var t = default(Token);

t.SymbolId = comma;

t.Value = ",";

t.Line = pc2.Line;

t.Column = pc2.Column;

t.Position = pc2.Position;

toks.Add(t);

var pc3 = new ParserContext(toks);

pc3.EnsureStarted();

var type = ExpressionParser.ParseType(pc3);

// advance an extra position to clear the trailing ".", which we discard

var adv = -1;

while (adv < toks.Count)

{

context.Advance();

++adv;

}

return new ParseNode(PrivateImplementationType,

"PrivateImplementationType", new ParseNode[] { type }, l, c, p);

}

return new ParseNode(PrivateImplementationType,

"PrivateImplementationType", new ParseNode[0], l, c, p);

}Remember that ParserContext drives an instance implementing IEnumerable

I won't include ParseMember() because it's long, but the main reason for that, and for the virtualness of this production in the first place, is error handling. Member declarations have a number of constraints on them that are not easy to specify in a grammar. Abstract for example, tells us a method cannot have a method body. Furthermore, errors in statements were bubbling up and getting confusing so this is better.

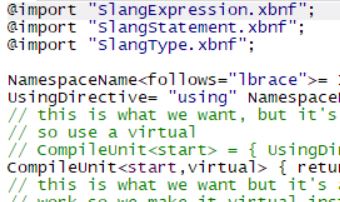

Finally, we have our top level grammar, which again, delegates to code a lot, this time to resolve ambiguities:

@import "SlangExpression.xbnf";

@import "SlangStatement.xbnf";

@import "SlangType.xbnf";

NamespaceName<follows="lbrace">= Identifier { "." Identifier };

UsingDirective= "using" NamespaceName ";";

// this is what we want, but it's really ambiguous

// so use a virtual

// CompileUnit<start> = { UsingDirective } { Namespace | TypeDecl }

CompileUnit<start,virtual> { return _ParseCompileUnit(context); }

// this is what we want but it's a lot of

// work so we make it virtual instead

// Namespace= "namespace" NamespaceName "{" {UsingDirective } {TypeDecl} "}"

// rbrace is enforced in code but we specify it here for readability

Namespace<virtual,firsts="namespaceKeyword", follows="rbrace">

{ return _ParseNamespace(context);}

// make sure this gets a constant as it's only used in code

namespaceKeyword<terminal>= "namespace";

usingKeyword= "using";This actually isn't too bad to parse, it's just a touch difficult for LL(1), so this is where virtual productions and delegation to generated methods really shines:

static ParseNode _ParseNamespace(ParserContext context)

{

var line = context.Line;

var column = context.Column;

var position = context.Position;

var children = new List<ParseNode>();

if (namespaceKeyword != context.SymbolId)

context.Error("Expecting namespace");

children.Add(new ParseNode(namespaceKeyword, "namespaceKeyword",

context.Value, context.Column, context.Line, context.Position));

context.Advance();

children.Add(ParseNamespaceName(context));

if (lbrace != context.SymbolId)

context.Error("Expecting { in namespace declaration");

context.Advance();

while (usingKeyword == context.SymbolId)

{

children.Add(ParseUsingDirective(context));

}

while (EosSymbol != context.SymbolId && ExpressionParser.rbrace != context.SymbolId)

{

children.Add(TypeDeclParser.ParseTypeDecl(context));

}

if (rbrace != context.SymbolId)

context.Error("Expecting } in namespace declaration");

context.Advance();

return new ParseNode(Namespace, "Namespace", children.ToArray(), line, column, position);

}It's all very straightforward. Note that we don't allow nested namespaces here. This is deliberate, as the CodeDOM does not support them.

And now let's use it. The demo just parses itself and dumps the result to the screen:

stm = File.OpenRead(@"..\..\Program.cs");

var tokenizer = new SlangTokenizer(stm);

var pt = SlangParser.Parse(tokenizer);

_WriteTree(pt, Console.Out);This will spit a nasty mess at your console window representing the parse tree.

Now we can parse 95% of Slang. What's left are comments and directives, but we'll get to that in the next article.

In a follow up article, we will use Parsley to render our parse tree into a CodeDOM Abstract Syntax Tree.

History

- 31th December, 2019: Initial submission

- 1st January, 2020: Update