Pck/LALR(1): An LR Parsing Algorithm

Updated on 2019-08-14

An LALR(1) parsing algorithm as part of Pck

Introduction

Well, I finally did it. LALR(1) parser support is now in PCK.

Background

This background assumes at least a passing familiarity with LL(1) parsing. If you need to develop that, you can fiddle around with my tutorial on creating an LL(1) parser. This also assumes some small familiarity with PCK.

tutorial on creating an LL(1) parser PCK

LALR(1) parsing is a form of parsing that works almost "inside out" compared to LL(1) parsing; it builds the tree from the leaves to the root, guiding the parse using the input tokens. Contrast this with LL(1) parsers, which build the tree from the root down to the leaves, using the grammar to guide the parse. In the LALR(1)/LR case, the input tells us what rule to use which the parser compares to the grammar. In the LL(1)/LL case, the grammar tells us what rule to use, which the parser compares to the input.

Sound confusing? It is, a little at first. Luckily, the particulars of the LALR(1) algorithm aren't critical to using it. If it were, most people would be out of luck!

That having been said, we have to start somewhere. Here's the upshot of LALR(1) parsing compared to LL(1) parsing:

- LALR(1) parses a much larger subset of CFG grammars. It's significantly more powerful than LL(1) in terms of what it can recognize.

- LALR(1) can be left recursive or right recursive. Left recursion is encouraged even, since it doesn't take as much stack space.

- The grammars don't require factoring before they can be used

Here are the downsides of LALR(1) parsing:

- The learning curve is slightly higher than using an LL(1) parser, as it's more complicated.

- The algorithm to generate the tables is next to impossible for mortals to comprehend, no matter how many comments there are.

- It doesn't lend itself to natural tree traversal. Instead, it builds trees from the bottom up.

- Error recognition isn't quite as soon as it is in LL(1) due to the way the algorithm works.

Here's my recommendation, given the above: Use an LL(1) parser if you can, or an LALR(1) parser only if you need the extra parsing power that comes with it. PCK provides both.

Other parser generators that use the LALR(1) algorithm include YACC and Gold Parser.

Using the Code

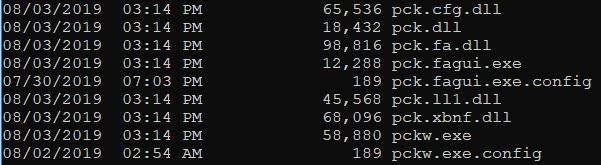

First, we need to build the code before we can use it. Get pckw (and the supporting assemblies) into your path and let's get started.

Create an XBNF grammar file:

expr = expr "+" term | term;

term= term "*" factor | factor;

factor= "(" expr ")" | int;

add= "+";

mul= "*";

lparen= "(";

rparen= ")";

int= '[0-9]+';Convert it to a PCK specification:

pckw xlt expr.xbnf expr.pckNow take the pck specification and use it to generate a tokenizer/lexer:

pckw fagen expr.pck ExprTokenizer.cs /namespace DemoFinally, take the same pck specification file and use it to generate a LALR(1) parser:

pckw lalr1gen expr.pck ExprParser.cs /namespace DemoNow include those files in your project, and reference the Demo namespace. Add a reference to the assembly pck. Add the following code somewhere, probably in your Main() routine if this is a console app:

var parser = new ExprParser(new ExprTokenizer("3*(4+7)"));That creates a new parser and tokenizer over the expression 3*(4+7).

If you want streaming access to the pre-transformed parse tree, you can call the parser's Read() method in a loop, very much like Microsoft's XmlReader:

while(parser.Read())

{

switch(parser.NodeType)

{

case LRNodeType.Shift:

case LRNodeType.Error:

Console.WriteLine("{0}: {1}",parser.Symbol,parser.Value);

break;

case LRNodeType.Reduce:

Console.WriteLine(parser.Rule);

break;

}

}However, that doesn't take advantage of trimming or transformation on the parse tree. It's also harder to use in many situations that a parse tree itself.

Fortunately, it's really easy to get a parse tree:

var tree = parser.ParseReductions(); // pass true if you want the tree to be trimmed.

Console.WriteLine(tree);That returns a node with other nodes as children, representing the parse tree. Each node has all the information about the parsed element. Nodes that were collapsed in the grammar are not in the parse tree. Nodes that were hidden are not in the parse tree unless ShowHidden is true on the parser.

You can also create parsers at runtime, like you can with the LL(1) parser. You don't have to generate code.

To do so, you'll have to reference the pck, pck.cfg, pck.fa and pck.lalr1 assemblies

// we need both a lexer and a CfgDocument.

// we read them from the same file.

var cfg = CfgDocument.ReadFrom(@"..\..\..\expr.pck");

var lex = LexDocument.ReadFrom(@"..\..\..\expr.pck");

// create a runtime tokenizer

var tokenizer = lex.ToTokenizer("3*(4+7)", cfg.EnumSymbols());

// create a parser

var parser = cfg.ToLalr1Parser(tokenizer);This code does the equivalent of the generated code, without actually generating any code. It can take some time to generate the LALR(1) tables and the FA tokenizer, so pre-generating is recommended. Furthermore, from the above, the set up is obviously a bit more complicated.

History

- 14th August, 2019 - Initial submission