Rolex: Unicode Enabled Lexer Generator in C#

Updated on 2023-11-20

Generate fast, easy to use lexers/scanners in major .NET languages

Introduction

Lexical analysis is often the first stage of parsing. Before we can analyze a structured document, we must break it into lexical elements called tokens or lexemes. Most parsers use this approach, taking tokens from the lexer and using them as their elemental input. Lexers can also be used outside of parsers, and are in tools like minifiers or even simple scanners that just need to look for patterns in a document, since lexers essentially work as compound regular expression matchers.

Rolex generates lexers to make this process painless and relatively intuitive, both in terms of defining them and using them. The code Rolex generates uses a simple but reliably fast DFA algorithm. All matching is done in linear time. There are no potentially quadratic time expressions you can feed it since it doesn't backtrack. The regular expressions are simple. There are no capturing groups because they are not needed. There are no anchors because they complicate matching, and aren't very useful in tokenizers. There are no lazy expressions, but there is a facility to define multicharacter ending conditions, which is 80% of what lazy expressions are used for.

The main advantage of using Rolex is speed. The generated tokenizers are very fast. The main disadvantage of using Rolex, aside from a somewhat limited regular expression syntax, is the time it can take to generate complicated lexers. Basically, you pay for the performance of Rolex upfront, during the build.

Update: Replaced FA engine with a faster variant in order to speed up code generation, and some bug fixes. Removed the "prototype" option.

Update 2: Improved performance of FA engine even further and added externaltoken feature.

Update 3: Simplified DFA representation - flattened to an array of ints. Added the ability to render DFA and NFA graphs of the lexer using GraphViz.

Update 4: Added graph output, fixed bug in code generation, and improved output.

Conceptualizing this Mess

The Rolex Command Line Interface

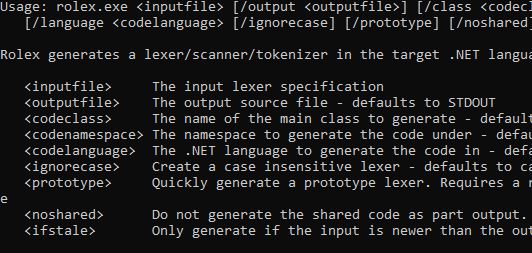

Using Rolex is relatively straightforward. Here is the usage screen:

Usage: rolex.exe <inputfile> [/output <outputfile>] [/class <codeclass>]

[/namespace <codenamespace>] [/language <codelanguage> [/external <externaltoken>]

[/ignorecase] [/noshared] [/ifstale] [/nfagraph <dfafile>] [/dfagraph <nfafile>]

[/graph <graphfile>] [/dpi <dpi>]

Rolex generates a lexer/scanner/tokenizer in the target .NET language

<inputfile> The input lexer specification

<outputfile> The output source file - defaults to STDOUT

<codeclass> The name of the main class to generate - default derived from <outputfile>

<codenamespace> The namespace to generate the code under - defaults to none

<codelanguage> The .NET language to generate the code in - default derived from <outputfile>

<externaltoken> The namespace of the external token if one is to be used - default not external

<ignorecase> Create a case insensitive lexer - defaults to case sensitive

<noshared> Do not generate the shared code as part of the output - defaults to generating the shared code

<ifstale> Only generate if the input is newer than the output

<staticprogress> Do not use dynamic console features for progress indicators

<nfafile> Write the NFA lexer graph to the specified image file.*

<dfafile> Write the DFA lexer graph to the specified image file.*

<graphfile> Write all the individual rule DFAs to a graph.*

<dpi> The DPI of any outputed graphs - defaults to 300.*

* Requires GraphViz to be installed and in the PATHinputfile(required) - indicates the lexer specification file to generate from. These are described below.outputfile- indicates the output code file to generate to or defaults tostdout.codeclass- indicates the name of the generated tokenizer class. By default, it is derived from theoutputfile.codelanguage- indicates the language to generate the code for. This is based on theoutputfileextension if it was specified, or defaults to C#.codenamespace- indicates the namespace to render the code under. Defaults to no namespace.ignorecase- indicates that the entire specification should be treated as case insensitive unlessignoreCase=falseis specified on one of the rules.noshared- indicates that the shared dependency code should not be generated. By default, Rolex generates all dependency code as part of the tokenizer output file. If you're generating multiple tokenizers, you only want one copy of the shared code, so simply specifynosharedfor the second tokenizer and onward to avoid getting compile errors from duplicated code.externaltoken- indicates that Rolex should use a provided token in the given namespace instead of generating its own. Some parsers provide their own token struct. Defaults to generating its ownTokenstruct.ifstale- indicates that the generation should not occur unlessoutputfileis older thaninputfile. This can be useful as part of the pre build step so that the output isn't rebuild unless the input has changed. This is very useful especially for complicated lexer specs that take a long time to generate.nfagraph- generates a graph of the NFA state machine for the lexer. Primarily for debugging expressions.dfagraph- generates a graph of the DFA state machine for the lexer.graph- generates a graph of every rule, including block ends. Can be large.dpi- the DPI of any generated graphs - defaults to 300.

The Rolex Lexer Specification

The lexer specification was designed primarily to be machine generated by other tools, like Parsley However, it's easy enough to use by hand if you aren't afraid of regular expressions.

It is a line based format where each line is formed similar to the one that follows:

ident<id=1,ignoreCase>= '[A-Z_][0-9A-Z_]*'or more generally:

identifier [ "<" attributes ">" ] "=" ( "'" regexp "'" | "\"" literal "\"" )Each attribute is an identifier followed optionally by = and a JSON style string, boolean, integer or other value. If the value isn't specified, it's true meaning ignoreCase above is set to true. Note how literal expressions are in double quotes and regular expressions are in single quotes.

There are a few available attributes, listed as follows:

id- Indicates the non-negative integer id associated with this symbolignoreCase- Indicates that the expression should be interpreted as case-insensitivehidden- Indicates that this symbol should be passed over by the lexer and not reportedblockEnd- Indicates a multi-character ending condition for the specified symbol. When encountered, the lexer will continue to read until theblockEndvalue is found, consuming it. This is useful for expressions with multiple character ending conditions like C block comments, HTML/SGML comments and XML CDATA sections. This can be a regular expression (single quotes) or a literal (double quotes).

Rules can be somewhat ambiguous in terms of what they match. If they are, the first rule in the specification is the rule that is matched, yielding a set of rules prioritized by id (lowest first) and otherwise by document order.

The regular expression language supports basic non-backtracking constructs and common character classes, as well as [:IsLetter:]/[:IsLetterOrDigit:]/[:IsWhiteSpace:]/etc. that map to their .NET counterparts. Make sure to escape single quotes since they are used in Rolex to delimit expressions.

The Generated Code API

Rolex exposes generated tokenizers using a simple API. Each tokenizer is a class with each symbol declared as an integer constant on that class. The tokenizer when constructed, takes an IEnumerator

Let's put what we've covered together to get some lexing going on. First, let's make a spec file, Example.rl:

id='[A-Z_a-z][0-9A-Z_a-z]*'

int='0|\-?[1-9][0-9]*'

space<hidden>='[\r\n\t\v\f ]'

lineComment<hidden>= '\/\/[^\n]*'

blockComment<hidden,blockEnd="*/">= "/*"Above, we've defined id and int, and the rest are hidden whitespace and comments.

Now, we need to cajole some code for it out of Rolex:

Rolex.exe Example.rl /output ExampleTokenizer.cs /namespace RolexDemoAfter including ExampleTokenizer.cs in our project, we can use it like this:

var input = "foo bar/* foobar */ bar 123 baz -345 fubar 1foo *#( 0";

var tokenizer = new ExampleTokenizer(input);

foreach(var tok in tokenizer)

{

Console.WriteLine("{0}: {1} at column {2}", tok.SymbolId, tok.Value,tok.Column);

}Which will spit this to the console:

0: foo at column 1

0: bar at column 4

0: bar at column 20

1: 123 at column 24

0: baz at column 28

1: -345 at column 32

0: fubar at column 37

1: 1 at column 43

0: foo at column 44

-1: * at column 48

-1: # at column 49

-1: ( at column 50

1: 0 at column 52The underlying algorithm that makes it work is ridiculously simple. It is a DFA based algorithm constructed using a standard powerset construction technique, modified to use ranges of characters at a time instead of dealing with them one at a time so that it can handle Unicode. Minimization is based on the Hopcroft algorithm (formerly it was based on the Huffman algorithm). I had previously had almost everything except this powerset construction technique using ranges but not here, and it was choking on Unicode. I previously thought it wasn't feasible to do until I saw that some other libraries out there - namely "brics" in Java and its C# port "Fare" were using that technique, but their implementations weren't helpful to me because our code was far too different. I finally worked it out myself. I thought. Just knowing it was possible I guess was enough for me to solve it. This was after years. But then I found my solution wasn't working! Finally, I pored over the Fare code, and I gleaned enough of how it worked that I was able to create a similar method with my code. I've included the copyright notice in the single source file it impacts. At least it's done. I considered using Fare in Rolex but investigating it, it looks like it doesn't handle Unicode surrogate characters at all, and the error handling was bad, even by my relaxed standards, so I went ahead with my own implementation. Fare might be slightly faster for large state machines, and I know it's significantly faster when dealing with large character sets but it cheats, and doesn't work for surrogate values. Rolex's regex engine is UTF-32 internally, so it doesn't have this problem, but it takes longer to generate the tables as a result.

Anyway, problem solved, my sexier solutions like Lexly, my Pike VM based lexer can't compete with good old DFA in raw performance, no matter how many optimizations I make. DFAs aren't cool tech. They aren't little bytecode interpreters or anything. They're boring, mostly. But they do the job, and quickly, and that's what we want.

Lexly, my Pike VM based lexer little bytecode interpreters

As I mentioned, the main disadvantage, other than taking longer to generate the code for, is that they can't readily support complicated constructs like anchors, or lazy matching or capturing groups without "hacking" the DFA algorithm. For tokenizers, these features don't matter. Lazy matching might, but Rolex offers an alternative.

The tradeoff is worth it in speed. Rolex can lex about 10 times as fast as an NFA based Lexly lexer, and about twice as fast as a hand tuned DFA based Lexly lexer simply because it doesn't have to host a complicated virtual machine to run it.

It still uses Deslang/Slang technology to render the code but I've separated it from the Build Pack, and simply included the deslang.exe tool in the solution folder to satisfy the pre-build step. It is responsible for turning the contents of the Export folder into the Deslanged.cs code, which contains all the CodeDOM representations of the code files in that folder. Be aware that the files in that folder are not full C#, but rather Slang, a CodeDOM compliant C# subset. If you modify them, you can break things if you don't know how to use it.

History

- 25th January, 2020 - Initial submission

- 4th February, 2020 - Fixed bug in FA.Parse()

- 11th February, 2020 - Improved generation speed, fixed one more bug in FA.Parse()

- 25th February, 2020 - Improved generation speed, added "externaltoken" feature

- 18th November, 2023 - Simplified tables, added options, minor bugfixes

- 20th November, 2023 - Minor bugfix, added options, improved code generation