Using the New ESP32 LCD Panel API With htcw_gfx and htcw_uix

Updated on 2023-03-20

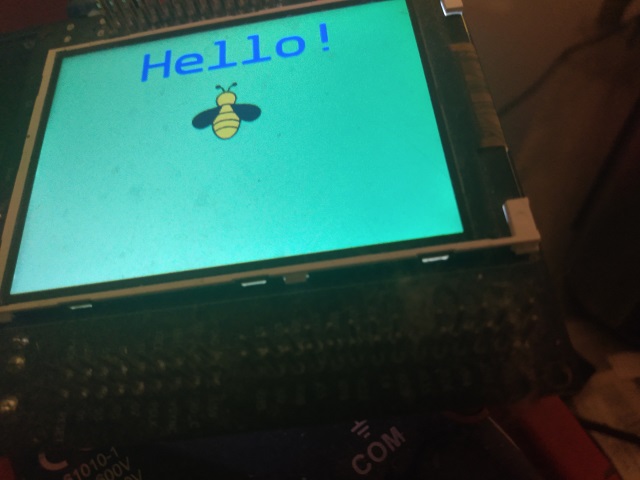

Combine some great technologies for rich, responsive IoT user interfaces on the ESP32

Introduction

With this article, I'll be introducing several technologies that can be used together to create maintainable and responsive user interfaces on the ESP32 family of MCUs.

- The new ESP LCD Panel API introduces an efficient way to communicate with LCD devices, but brings some challenges along with it.

- htcw_gfx provides a rich set of drawing operations in any specified bitmap format, but by itself has no way to communicate directly to an LCD without some kind of driver, and is primarily stateless, which doesn't work well with the way the ESP LCD Panel API operates - at least without help.

- htcw_uix is a fledgling UI/UX library I created primarily to overcome the challenges introduced by the LCD Panel API. However, it also provides a flexible and maintainable way to create screens for IoT devices.

It should be noted that htcw_gfx and htcw_uix are not tied to any particular IoT platform. In fact, they will even run on a PC. One of the reasons we need the ESP LCD Panel API is to cover that last mile of getting pixels to the display, which is platform specific.

For more information on the challenges the ESP LCD Panel API introduces, and how htcw_uix overcomes them, see this article. Rather than cover them again here, I'll point you to that prior article, which is essentially a precursor to this one. In fact, this code builds on the code in that article.

We kill several birds with a single project here, and it runs on six different devices, taking advantage of any input peripherals that come installed with each device.

Prerequisites

- You'll need VS Code with PlatformIO installed.

- You'll need an ESP Display S3 Parallel w/ Touch, a Lilygo TTGO T1 Display, an ESP_WROVER_KIT 4.1, an ESP Display S3 4" w/ Touch, an M5Stack Core2, or an M5Stack Fire.

Note: The project behaves slightly differently on each device due to each device having different hardware. For example, the ESP_WROVER_KIT 4.1 has no inputs, the TTGO T1 has two physical buttons, and the ESP Display S3 has a capacitive touch screen. To experience the whole project, you'd need several devices.

Programming Style

Like the STL, htcw_gfx makes heavy use of generic programming in order to provide the features it does in a flexible way. This requires understanding and using templates, and type aliases such as using and typedef.

htcw_uix is more OOP style, with virtual classes and binary interfaces, but still uses templates fundamentally to indicate the native pixel format and palette, if any.

The ESP LCD Panel API, meanwhile, is straight C and fairly low level.

This may be unfamiliar to greener programmers who cut their teeth on the Arduino framework, which tends to shield developers from this stuff. I can't really teach you all of this in this article, but it may help to pick up a copy of Accelerated C++ by Andrew Koenig and Barbara Moo. It's mercifully brief, and teaches C++ the "right way", such as there is one.

Understanding this Mess

To use the ESP LCD Panel API effectively, you need to be able to redraw parts of the screen on demand, whenever they change. You also can't draw directly to the display. Instead, you draw to a bitmap and then send that bitmap to the display. This means you basically need some kind of rendering framework that can track dirty rectangles and invoke drawing code whenever it needs to update part or all of the screen. I covered the approach we'll be using in the previous article linked in the introduction, if you want further reading. We'll use htcw_uix to handle the higher-level widget/control library and the rendering of those controls, and htcw_uix in turn uses htcw_gfx for the actual drawing operations.
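Here is a minimal sketch of a tracker that records invalidated rectangles and merges any that overlap, so each area is redrawn only once. It is not htcw_uix's actual implementation, and the rect and dirty_tracker names are hypothetical. A renderer built on this would walk regions(), have each control paint the parts it covers into a bitmap, and send that bitmap to the display.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical inclusive rectangle: (x1,y1) top-left, (x2,y2) bottom-right.
struct rect {
    int x1, y1, x2, y2;
    bool intersects(const rect& o) const {
        return x1 <= o.x2 && o.x1 <= x2 && y1 <= o.y2 && o.y1 <= y2;
    }
    // Smallest rectangle containing both.
    rect merge(const rect& o) const {
        return {std::min(x1, o.x1), std::min(y1, o.y1),
                std::max(x2, o.x2), std::max(y2, o.y2)};
    }
};

// Hypothetical tracker that keeps a list of non-overlapping dirty regions.
class dirty_tracker {
public:
    void invalidate(rect r) {
        assert(r.x1 <= r.x2 && r.y1 <= r.y2);
        // Absorb any existing region that overlaps the new one. The grown
        // rectangle may now overlap others, so rescan until nothing merges.
        bool merged = true;
        while (merged) {
            merged = false;
            for (std::size_t i = 0; i < dirty_.size(); ++i) {
                if (dirty_[i].intersects(r)) {
                    r = r.merge(dirty_[i]);
                    dirty_.erase(dirty_.begin() + i);
                    merged = true;
                    break;
                }
            }
        }
        dirty_.push_back(r);
    }
    const std::vector<rect>& regions() const { return dirty_; }
    void clear() { dirty_.clear(); }

private:
    std::vector<rect> dirty_;
};
```

Merging overlapping rectangles into their bounding box may repaint a few pixels that weren't actually dirty. The payoff is a shorter list, which means fewer separate transfers to the display.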

The other major thing we'll be covering is the ESP LCD Panel API itself, since using it comes with a significant learning curve, especially when you have to provide your own driver for a display controller it doesn't support intrinsically. I've provided three: one for the ILI9341 used in the ESP_WROVER_KIT, one for the ILI9488 used in the ESP Display S3, and one for the ILI9342 used in the M5Stack devices. The ST7789 driver for the TTGO T1 Display already ships with the ESP-IDF, and the ST7701 driver ships with my lcd_init.h file.

Note on the framework used: I chose the Arduino framework for this project, but all three of these APIs are usable from within the ESP-IDF. There, they make a great choice for user interfaces, since the options in that framework are limited.

htcw_uix

This library provides a framework for high level "widgets" or controls as well as a couple of basic controls and the ability to be extended with your own controls with a relatively small amount of effort. It handles the dirty rectangle tracking, screen/control rendering, and any touch display input.

Let's cover the major elements:

control<>

This template base class sits at the core of the library as one of the fundamental building blocks. Every widget/control publicly derives from this template class. It provides some basic members for locating it on the screen, painting itself, requesting a repaint of all or a portion of the control, and responding to touch.

screen<>

The screen is responsible for keeping track of all the controls, rendering them, and forwarding touch events to the appropriate control. In order to render efficiently, it uses a dirty rectangle scheme. It also supports multiple render buffers for maximum throughput when using DMA.

label<>

This is a concrete control that simply shows some text on the screen, with optional background and border colors.

push_button<>

This control provides a button that responds to being touched. Otherwise, it behaves like the label<>.

svg_box<>

This control displays an SVG image document (svg_doc).

Note: The project also provides an svg_box_touch<> control in /src/main.cpp that demonstrates how to extend a control by adding touch support to an SVG image. However, it is not part of UIX itself.

htcw_gfx

This library provides the fundamental operations used to actually draw the controls. In short, it's a graphics library. It sits between htcw_uix and the ESP LCD Panel API and provides critical drawing primitives, image support and text for rendering a display. Most of the core functionality therein is provided by the draw class. The documentation is here.

ESP LCD Panel API

This is part of the ESP-IDF, although it's also callable from Arduino. It provides the low-level interfacing with the display controller and the bus that connects it. Basically, it configures the display and then knows enough about talking to it to accept bitmaps and transfer them to the display asynchronously using DMA. htcw_uix feeds it bitmaps generated by htcw_gfx, and it sends them to the display hardware over the indicated bus. That bus may be SPI, RGB (so far ESP32-S3 only in lcd_init.h), i8080 or I2C. Most of the API is used for initializing the display, and the rest of what we need is covered by a handful of functions. We'll go over it when we cover the code next.

Coding this Mess

I've kept the core functionality in /src/main.cpp, so we'll cover that first:

main.cpp

#include <Arduino.h>
#include <Wire.h>
#include "config.h"
#include "lcd_config.h"
#define LCD_IMPLEMENTATION
#include "lcd_init.h"
#include <gfx.hpp>
using namespace gfx;
#include <uix.hpp>
using namespace uix;

// SVG converted to header using
// https://honeythecodewitch.com/gfx/converter
#include "bee_icon.hpp"
static const_buffer_stream& svg_stream = bee_icon;

// downloaded from fontsquirrel.com and header generated with
// https://honeythecodewitch.com/gfx/generator
//#include "fonts/Rubik_Black.hpp"
//#include "fonts/Telegrama.hpp"
#include "fonts/OpenSans_Regular.hpp"
static const open_font& text_font = OpenSans_Regular;

Most of this is just includes, except for the two lines that alias the SVG document stream and the font. The first group is the core: Arduino, Wire (for I2C), the configuration settings for the selected device, and lcd_init.h, which contains the ESP LCD Panel API initialization and interfacing code. Following that is the higher-level stuff: htcw_gfx, htcw_uix, an SVG image, and our font, the last two embedded as objects.

The aliases simply make the font and SVG document from the header files easy to change.

#ifdef LCD_TOUCH
void svg_touch();
void svg_release();
#endif // LCD_TOUCH

// declare a custom control
template <typename PixelType,
          typename PaletteType = gfx::palette<PixelType, PixelType>>
class svg_box_touch : public svg_box<PixelType, PaletteType> {
    // public and private type aliases
    // pixel_type and palette_type are
    // required on any control
public:
    using type = svg_box_touch;
    using pixel_type = PixelType;
    using palette_type = PaletteType;
private:
    using base_type = svg_box<PixelType, PaletteType>;
    using control_type = control<PixelType, PaletteType>;
    using control_surface_type = typename control_type::control_surface_type;
public:
    svg_box_touch(invalidation_tracker& parent,
                  const palette_type* palette = nullptr)
        : base_type(parent, palette) {
    }
#ifdef LCD_TOUCH
    virtual bool on_touch(size_t locations_size,
                          const spoint16* locations) {
        svg_touch();
        return true;
    }
    virtual void on_release() {
        svg_release();
    }
#endif // LCD_TOUCH
};

This is a custom control we use to render an SVG that responds to touch. There are only three built-in controls in htcw_uix at the moment, so it pays to understand how to make your own. It's not really that difficult, especially after you've done it once. Specifically, you publicly inherit from control<> or a derived control, passing a couple of template arguments, and implement a constructor that takes a couple of arguments to forward to the base class. Finally, you implement methods like on_paint() and on_touch()/on_release() to handle drawing and touching the control. Since svg_box<> already does the painting here, svg_box_touch<> only adds the touch handlers.

With on_paint(), you use htcw_gfx to draw to the destination that's passed in. This destination is the size of the control and starts at (0,0). You also have a clipping rectangle you can optionally use to determine which part of the control needs to be redrawn.

In on_touch(), you receive an array of locations relative to your control (starting at 0,0). Your job is to hit test your control to determine whether it was touched, react to the touch, and return true if the hit test succeeded, or false if it didn't. The hit testing is optional. If you always return true, the hit test region is effectively the entire rectangle of your control's bounds, but hit testing allows you to handle non-rectangular areas. You get an array of touch locations because some touch hardware supports gestures and can therefore report multiple points.
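For example, a round control could hit test along the lines of the sketch below. It accepts the touch only if at least one reported point falls inside the ellipse inscribed in the control's bounds, which is a circle for a square control. The touch_point type and hit_test_ellipse() function are hypothetical stand-ins. In UIX the points arrive as spoint16 values relative to the control, and you would return this result from on_touch().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for a control-relative touch point.
struct touch_point {
    int16_t x, y;
};

// Returns true if any of the touch points lies inside the ellipse inscribed
// in a control of the given width and height (a circle when they're equal).
bool hit_test_ellipse(std::size_t count, const touch_point* points,
                      int width, int height) {
    assert(width > 0 && height > 0);
    const double rx = width / 2.0, ry = height / 2.0;
    for (std::size_t i = 0; i < count; ++i) {
        // Distance from the pixel's center to the control's center,
        // normalized so the ellipse boundary is at 1.0.
        const double dx = (points[i].x + 0.5 - rx) / rx;
        const double dy = (points[i].y + 0.5 - ry) / ry;
        if (dx * dx + dy * dy <= 1.0) {
            return true;
        }
    }
    return false;
}
```

A touch in the corner of a 60x60 control falls outside the circle and is treated as a miss, even though it is inside the control's rectangular bounds.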

on_release() simply notifies your control when it is no longer being touched.

Note: In the above code, only devices with LCD_TOUCH defined handle touch.

// declare the format of the screen
using screen_t = screen<LCD_WIDTH, LCD_HEIGHT, rgb_pixel<16>>;

// declare the control types to match the screen
#ifdef LCD_TOUCH
// since this supports touch, we use an interactive button
// instead of a static label
using label_t = push_button<typename screen_t::pixel_type>;
#else
using label_t = label<typename screen_t::pixel_type>;
#endif // LCD_TOUCH
using svg_box_t = svg_box_touch<typename screen_t::pixel_type>;

// for access to RGB565 colors which LCDs and the main screen use
using color16_t = color<rgb_pixel<16>>;
// for access to RGBA8888 colors which controls use
using color32_t = color<rgba_pixel<32>>;

#ifdef PIN_NUM_BUTTON_A
// declare the buttons if defined
using button_a_t = int_button<PIN_NUM_BUTTON_A, 10, true>;
#endif // PIN_NUM_BUTTON_A
#ifdef PIN_NUM_BUTTON_B
using button_b_t = int_button<PIN_NUM_BUTTON_B, 10, true>;
#endif // PIN_NUM_BUTTON_B

// if we have no inputs, declare
// a timer
#if !defined(PIN_NUM_BUTTON_A) && \
    !defined(PIN_NUM_BUTTON_B) && \
    !defined(LCD_TOUCH)
using cycle_timer_t = uix::timer;
#endif // !defined(PIN_NUM_BUTTON_A) ...

// declare touch if available
#ifdef LCD_TOUCH
using touch_t = LCD_TOUCH;
#endif // LCD_TOUCH

Here, we have some type aliases to make everything easier. Note that some of them depend on the hardware you're using. On the ESP Display S3, for example, the label is actually a button so that it can respond to being touched. The TTGO uses physical buttons, while the ESP_WROVER_KIT has no inputs, so it relies on a timer to animate the display.

Next, we have our global variables. Because Arduino splits your application into setup() and loop(), it practically demands the use of globals to share data between the two routines.

// UIX allows you to use two buffers for maximum DMA efficiency
// you don't have to, but performance is significantly better
// declare 64KB across two buffers for transfer
// RGB mode is the exception. We don't need two buffers
// because the display works differently.
#ifndef LCD_PIN_NUM_HSYNC
constexpr static const int lcd_buffer_size = 32 * 1024;
uint8_t lcd_buffer1[lcd_buffer_size];
uint8_t lcd_buffer2[lcd_buffer_size];
#else
constexpr static const int lcd_buffer_size = 64 * 1024;
uint8_t lcd_buffer1[lcd_buffer_size];
uint8_t* lcd_buffer2 = nullptr;
#endif // !LCD_PIN_NUM_HSYNC

// our svg doc for svg_box
svg_doc doc;

// the main screen
screen_t main_screen(sizeof(lcd_buffer1),
                     lcd_buffer1,
                     lcd_buffer2);

// the controls
label_t test_label(main_screen);
svg_box_t test_svg(main_screen);

#ifdef PIN_NUM_BUTTON_A
button_a_t button_a;
#endif
#ifdef PIN_NUM_BUTTON_B
button_b_t button_b;
#endif
#ifdef EXTRA_DECLS
EXTRA_DECLS
#endif // EXTRA_DECLS

Here, we declare some globals for the LCD panel. htcw_uix can use DMA transfers on platforms that support them. To do so efficiently, it can send one buffer while drawing into the other, so using two buffers instead of one increases performance. Here we use two 32KB buffers. Note that both buffers must be the same size. The exception is RGB mode: while it does use DMA, it does so at a different level that isn't controlled from user code. Only one buffer is needed in that case, so we declare it using the full size of our transfer memory (64KB).
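To see why two equally sized buffers help, here is a self-contained sketch under a few stated assumptions. Suppose each flush covered whole rows of RGB565 pixels at 2 bytes each. Then a 32KB buffer on a 320-pixel-wide display would hold 32768 / 640 = 51 rows per transfer. The ping_pong class below models the handoff. The renderer draws into one buffer while the other may still be in flight, and it only has to wait when the buffer it wants hasn't finished sending. The class, its method names, and the use of std::atomic for the completion flag are illustrative assumptions, not UIX's internals. In this project, UIX learns that a transfer is done when lcd_flush_ready() calls main_screen.set_flush_complete().

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>

// How many full rows of the given width fit in one transfer buffer.
constexpr std::size_t rows_per_buffer(std::size_t buffer_bytes,
                                      std::size_t width,
                                      std::size_t bytes_per_pixel) {
    return buffer_bytes / (width * bytes_per_pixel);
}

// Hypothetical ping-pong scheme. A real driver would clear the in-flight
// flag from its DMA-complete callback (compare lcd_flush_ready() in main.cpp).
class ping_pong {
public:
    ping_pong(uint8_t* a, uint8_t* b) : buffers_{a, b} {
        in_flight_[0].store(false);
        in_flight_[1].store(false);
    }
    // Buffer to draw into next, or nullptr if it is still being sent
    // and the caller must wait.
    uint8_t* acquire() const {
        return in_flight_[current_].load() ? nullptr : buffers_[current_];
    }
    // Start "sending" the current buffer and switch to the other one.
    // Returns the index of the buffer that was submitted.
    std::size_t submit() {
        const std::size_t sent = current_;
        in_flight_[sent].store(true);
        current_ = 1 - current_;
        return sent;
    }
    // Called when the transfer of buffer `index` has finished.
    void transfer_complete(std::size_t index) {
        assert(index < 2);
        in_flight_[index].store(false);
    }

private:
    uint8_t* buffers_[2];
    std::atomic<bool> in_flight_[2];
    std::size_t current_ = 0;
};
```

With a single buffer, acquire() would return nullptr after every submit() until the transfer finished, so drawing and sending could never overlap.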

// button callbacks
#ifdef PIN_NUM_BUTTON_A
void button_a_on_click(bool pressed, void* state) {
    if (pressed) {
        test_label.text_color(color32_t::red);
    } else {
        test_label.text_color(color32_t::blue);
    }
}
#endif // PIN_NUM_BUTTON_A

#ifdef PIN_NUM_BUTTON_B
void button_b_on_click(bool pressed, void* state) {
    if (pressed) {
        main_screen.background_color(color16_t::light_green);
    } else {
        main_screen.background_color(color16_t::white);
    }
}
#endif // PIN_NUM_BUTTON_B

// no inputs
#if !defined(PIN_NUM_BUTTON_A) && \
    !defined(PIN_NUM_BUTTON_B) && \
    !defined(LCD_TOUCH)
int cycle_state = 0;
cycle_timer_t cycle_timer(1000, [](void* state) {
    switch (cycle_state) {
        case 0:
            main_screen.background_color(color16_t::light_green);
            break;
        case 1:
            main_screen.background_color(color16_t::white);
            break;
        case 2:
            test_label.text_color(color32_t::red);
            break;
        case 3:
            test_label.text_color(color32_t::blue);
            break;
    }
    ++cycle_state;
    if (cycle_state > 3) {
        cycle_state = 0;
    }
});
#endif // !defined(PIN_NUM_BUTTON_A) ...

// touch inputs
#ifdef LCD_TOUCH
void svg_touch() {
    main_screen.background_color(color16_t::light_green);
}
void svg_release() {
    main_screen.background_color(color16_t::white);
}
#endif // LCD_TOUCH

#ifdef LCD_TOUCH
#ifdef LCD_TOUCH_WIRE
touch_t touch(LCD_TOUCH_WIRE);
#else
touch_t touch;
#endif // LCD_TOUCH_WIRE
static void uix_touch(point16* out_locations,
                      size_t* in_out_locations_size,
                      void* state) {
    // no slots available to report any touch points
    if (*in_out_locations_size == 0) {
        return;
    }
#ifdef LCD_TOUCH_IMPL
    LCD_TOUCH_IMPL
#else
    // no touch implementation for this device: report zero points
    *in_out_locations_size = 0;
#endif // LCD_TOUCH_IMPL
}
#endif // LCD_TOUCH

This is where we provide the code to handle inputs (for example, on the TTGO T1 Display and the ESP Display S3) or set up the timer (for example, on the ESP_WROVER_KIT). In each case, this gives us a way to animate the screen. The TTGO alters the screen colors when you press one of the buttons. The ESP Display S3 alters them when you touch the text or the SVG image. The ESP_WROVER_KIT simply cycles through the colors once a second. Each device functions according to its available inputs.

You can see that actually changing the colors is very easy, requiring only that we set the appropriate member value. The screen takes care of keeping itself and its controls up to date as necessary.
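One way a single assignment can be enough is for each property setter to store the new value and mark the control dirty, leaving the redraw to the screen's next update(). The sketch below shows that pattern with hypothetical names (demo_label, needs_redraw(), painted()). It is not UIX's code. The check that skips invalidation when the value is unchanged is a design choice that a given library may or may not make.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical control with a single property.
class demo_label {
public:
    uint32_t text_color() const { return text_color_; }
    // Setter: store the value and schedule a repaint only if it changed.
    void text_color(uint32_t value) {
        if (value != text_color_) {
            text_color_ = value;
            invalidate();
        }
    }
    bool needs_redraw() const { return dirty_; }
    // What a screen's update pass would call after repainting the control.
    void painted() { dirty_ = false; }

private:
    void invalidate() { dirty_ = true; }
    uint32_t text_color_ = 0;
    bool dirty_ = false;
};
```

Because the setter only marks the control dirty, several property changes made before the next update() are drawn in a single repaint.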

The touch handling routine could use a bit of explanation. UIX potentially supports gestures, and so does the ESP Display S3's touch surface. Therefore, it's possible to use multiple fingers to generate multiple touch points. When the callback is invoked, in_out_locations_size points to the number of available slots in the out_locations array. We fill in as many of those slots as our touch hardware reports, up to that limit. Then we set *in_out_locations_size to the number of points we actually wrote.
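The in/out size convention can be shown without any hardware. The sketch below fills a caller-provided array from a list of readings. It then writes back how many points it actually produced, clamped to the capacity the caller passed in, mirroring the convention uix_touch() follows. The demo_point struct and the readings array are hypothetical stand-ins for gfx's point16 and whatever the touch driver reports.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical stand-in for an unsigned 16-bit point.
struct demo_point {
    uint16_t x, y;
};

// On entry, *in_out_size is the capacity of `out`.
// On exit, it is the number of points actually written.
void report_touches(const demo_point* readings, std::size_t reading_count,
                    demo_point* out, std::size_t* in_out_size) {
    assert(in_out_size != nullptr);
    const std::size_t n =
        reading_count < *in_out_size ? reading_count : *in_out_size;
    for (std::size_t i = 0; i < n; ++i) {
        out[i] = readings[i];
    }
    *in_out_size = n;
}
```

When the hardware reports nothing, the size must be set to zero explicitly. Otherwise the caller would read uninitialized slots as touches.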

#ifndef LCD_PIN_NUM_HSYNC
// not used in RGB mode
// tell UIX the DMA transfer is complete
static bool lcd_flush_ready(esp_lcd_panel_io_handle_t panel_io,
                            esp_lcd_panel_io_event_data_t* edata,
                            void* user_ctx) {
    main_screen.set_flush_complete();
    return true;
}
#endif // !LCD_PIN_NUM_HSYNC

// tell the lcd panel api to transfer the display data
static void uix_flush(point16 location,
                      typename screen_t::bitmap_type& bmp,
                      void* state) {
    int x1 = location.x,
        y1 = location.y,
        x2 = location.x + bmp.dimensions().width - 1,
        y2 = location.y + bmp.dimensions().height - 1;
    lcd_panel_draw_bitmap(x1, y1, x2, y2, bmp.begin());
    // if we're in RGB mode:
#ifdef LCD_PIN_NUM_HSYNC
    // flushes are immediate in rgb mode
    main_screen.set_flush_complete();
#endif // LCD_PIN_NUM_HSYNC
}

These two callbacks interface htcw_uix with the ESP LCD Panel API. The first one tells htcw_uix that the screen's flush is complete, which lets it know it can use that transfer buffer again. This is necessary for everything except RGB mode.

The second callback sends the bitmap data from htcw_uix to the display's controller. The bitmaps are portions of the screen, up to the buffer size specified when you initialized the screen: 32KB for non-RGB interfaces, and 64KB for RGB. bmp.begin() gives us a pointer to the start of the bitmap data. Note that in RGB mode, we signal flush completion immediately, because the transfer isn't a background transfer.
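The coordinate arithmetic in uix_flush() is easy to get off by one, so here it is in isolation. In uix_flush(), the lcd_panel_draw_bitmap() helper from lcd_init.h is called with inclusive corners, which is why width - 1 and height - 1 appear. For comparison, ESP-IDF's own esp_lcd_panel_draw_bitmap() documents its end coordinates as exclusive, so code calling that function directly would pass x + width and y + height instead. The structs and functions below are hypothetical names for illustration.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical description of one flush: top-left corner plus size.
struct flush_region {
    int x, y, width, height;
};

struct corners {
    int x1, y1, x2, y2;
};

// Inclusive bottom-right corner, as computed in uix_flush().
corners inclusive_corners(const flush_region& r) {
    assert(r.width > 0 && r.height > 0);
    return {r.x, r.y, r.x + r.width - 1, r.y + r.height - 1};
}

// Exclusive end coordinates, the convention ESP-IDF documents for
// esp_lcd_panel_draw_bitmap().
corners exclusive_corners(const flush_region& r) {
    assert(r.width > 0 && r.height > 0);
    return {r.x, r.y, r.x + r.width, r.y + r.height};
}

// Bytes of RGB565 data (2 bytes per pixel) in the region's bitmap.
std::size_t rgb565_bytes(const flush_region& r) {
    return static_cast<std::size_t>(r.width) * r.height * 2;
}
```

For example, a 320x51 strip starting at row 51 spans rows 51 through 101 inclusive and occupies 32,640 bytes, which fits in one 32KB buffer.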

// initialize the screen and controls
void screen_init() {
    test_label.bounds(srect16(spoint16(0, 10), ssize16(200, 60))
                          .center_horizontal(main_screen.bounds()));
    test_label.text_color(color32_t::blue);
    test_label.text_open_font(&text_font);
    test_label.text_line_height(45);
    test_label.text_justify(uix_justify::center);
    test_label.round_ratio(NAN);
    test_label.padding({8, 8});
    test_label.text("Hello!");
    // make the backcolor transparent
    auto bg = color32_t::black;
    bg.channel<channel_name::A>(0);
    test_label.background_color(bg);
    // and the border
    test_label.border_color(bg);
#ifdef LCD_TOUCH
    test_label.pressed_text_color(color32_t::red);
    test_label.pressed_background_color(bg);
    test_label.pressed_border_color(bg);
#endif // LCD_TOUCH
    test_svg.bounds(srect16(spoint16(0, 70), ssize16(60, 60))
                        .center_horizontal(main_screen.bounds()));
    gfx_result res = svg_doc::read(&svg_stream, &doc);
    if (gfx_result::success != res) {
        Serial.printf("Error reading SVG: %d\n", (int)res);
    }
    test_svg.doc(&doc);
    main_screen.background_color(color16_t::white);
    main_screen.register_control(test_label);
    main_screen.register_control(test_svg);
    main_screen.on_flush_callback(uix_flush);
#ifdef LCD_TOUCH
    main_screen.on_touch_callback(uix_touch);
#endif // LCD_TOUCH
}

This routine is pretty straightforward, and it works a lot like the InitializeComponent() routine if you've ever used WinForms in .NET. It initializes each control's "properties", then registers each control with the screen, and finally sets up the main screen's callback(s).

In this case, it also loads the SVG document to use with svg_box_touch<>.

Note that we set the label's background and border colors to a transparent color which we created by taking an existing color (black, though it doesn't matter) and setting the alpha channel (channel_name::A) to zero.
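Why does an alpha of zero read as transparent? When a renderer composites a color over what's already there, the usual "source over" blend weights the source by its alpha. An alpha of 0 therefore leaves the destination untouched, and 255 replaces it. The standalone sketch below blends one 8-bit channel that way. It illustrates the general technique and is not htcw_gfx's blending code.

```cpp
#include <cassert>
#include <cstdint>

// Blend one 8-bit channel of src over dst using src's 8-bit alpha:
// out = (src * a + dst * (255 - a)) / 255, rounded to nearest.
uint8_t blend_channel(uint8_t src, uint8_t dst, uint8_t alpha) {
    const unsigned sum = src * alpha + dst * (255u - alpha);
    return static_cast<uint8_t>((sum + 127) / 255);
}
```

Applied to each of R, G and B, an alpha of 0 returns the screen's background unchanged. That is why the label's transparent background and border let the white (or light green) screen show through.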

// set up the hardware
void setup() {
    Serial.begin(115200);
#ifdef I2C_PIN_NUM_SDA
    Wire.begin(I2C_PIN_NUM_SDA, I2C_PIN_NUM_SCL);
#endif // I2C_PIN_NUM_SDA
#ifdef EXTRA_INIT
    EXTRA_INIT
#endif // EXTRA_INIT
    // RGB mode uses a slightly different call:
#ifdef LCD_PIN_NUM_HSYNC
    lcd_panel_init();
#else
    lcd_panel_init(sizeof(lcd_buffer1), lcd_flush_ready);
#endif // LCD_PIN_NUM_HSYNC
    screen_init();
#ifdef PIN_NUM_BUTTON_A
    button_a.initialize();
    button_a.on_pressed_changed(button_a_on_click);
#endif // PIN_NUM_BUTTON_A
#ifdef PIN_NUM_BUTTON_B
    button_b.initialize();
    button_b.on_pressed_changed(button_b_on_click);
#endif // PIN_NUM_BUTTON_B
}

We've already done most of the initialization work, so setup() has very little to do except invoke what we've already created above. Here, we initialize the primary serial port and, if the device has one, the I2C bus. Next, we run any device-specific code defined in EXTRA_INIT, initialize the LCD panel, and call our screen initialization routine from earlier. Finally, depending on the device, we initialize the buttons.

// keep our stuff up to date and responsive
void loop() {
#ifdef PIN_NUM_BUTTON_A
    button_a.update();
#endif // PIN_NUM_BUTTON_A
#ifdef PIN_NUM_BUTTON_B
    button_b.update();
#endif // PIN_NUM_BUTTON_B
#if !defined(PIN_NUM_BUTTON_A) && \
    !defined(PIN_NUM_BUTTON_B) && \
    !defined(LCD_TOUCH)
    cycle_timer.update();
#endif // !defined(PIN_NUM_BUTTON_A) ...
    main_screen.update();
}

Here in loop(), we simply give each relevant object a chance to process. For the buttons, this fires press events as the buttons are clicked. For the timer, it gives the callback a chance to fire on its interval. For the screen, it processes touch events and re-renders any dirty areas of the screen.
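A polled timer like the one that drives the ESP_WROVER_KIT demo needs nothing more than a timestamp check each time update() runs. Below is a hypothetical sketch of that idea; it is not uix::timer's source. The current time is passed in rather than read from Arduino's millis(), so the logic can be tested off-device. Unsigned subtraction keeps the comparison correct when the 32-bit millisecond counter wraps around.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical polled interval timer: update() is called from loop()
// and fires the callback once each time the interval has elapsed.
class interval_timer {
public:
    using callback_t = void (*)(void* state);
    interval_timer(uint32_t interval_ms, callback_t cb, void* state = nullptr)
        : interval_(interval_ms), callback_(cb), state_(state) {}

    // On Arduino, now_ms would be millis().
    void update(uint32_t now_ms) {
        if (!started_) {
            // the first call only records the starting time
            started_ = true;
            last_ = now_ms;
            return;
        }
        // unsigned subtraction stays correct across counter wraparound
        if (now_ms - last_ >= interval_) {
            last_ = now_ms;
            if (callback_ != nullptr) {
                callback_(state_);
            }
        }
    }

private:
    uint32_t interval_;
    callback_t callback_;
    void* state_;
    uint32_t last_ = 0;
    bool started_ = false;
};
```

Because nothing blocks, the buttons, the timer and the screen all get serviced on every pass through loop().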

/lib Folder

Under this folder are the drivers for the ILI9341, ILI9488 and ILI9342 display controllers that allow them to be used with the ESP LCD Panel API. Writing a driver is a little involved, but if you've done it before, you're probably up to the task. You can rip the initialization codes from an existing codebase and then tweak the existing driver code as necessary. Beyond that, exploring these drivers is outside the scope of this article.
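When you borrow initialization codes from another codebase, they usually end up as a table of command bytes, parameters and post-command delays that the driver walks at startup. The sketch below shows that structure with a few standard MIPI DCS commands accepted by ILI93xx-family controllers: software reset (0x01), sleep out (0x11), pixel format (0x3A, where 0x55 selects 16 bits per pixel) and display on (0x29). The delay values are placeholders; check the controller's datasheet. Real sequences carry many more vendor-specific commands. The table layout and the send_fn hook are hypothetical; a real ESP-IDF driver would send each command through the panel IO, for example with esp_lcd_panel_io_tx_param().

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// One step of a controller init sequence (hypothetical layout).
struct init_cmd {
    uint8_t cmd;        // command byte
    uint8_t data[4];    // parameter bytes
    uint8_t data_len;   // how many of data[] are used
    uint16_t delay_ms;  // wait after sending (placeholder values below)
};

// A few standard MIPI DCS commands. Real sequences are much longer and
// include vendor-specific power, gamma and timing commands.
static const init_cmd init_sequence[] = {
    {0x01, {0}, 0, 120},   // software reset
    {0x11, {0}, 0, 120},   // sleep out
    {0x3A, {0x55}, 1, 0},  // pixel format: 16 bits per pixel
    {0x29, {0}, 0, 0},     // display on
};

// Hypothetical transport hook: a real driver would send the command and
// its parameters over the panel IO, then wait delay_ms.
using send_fn = void (*)(uint8_t cmd, const uint8_t* data, std::size_t len,
                         uint16_t delay_ms, void* ctx);

// Walk the table in order, handing each step to the transport hook.
void run_init(const init_cmd* seq, std::size_t count, send_fn send,
              void* ctx) {
    for (std::size_t i = 0; i < count; ++i) {
        assert(seq[i].data_len <= sizeof(seq[i].data));
        send(seq[i].cmd, seq[i].data, seq[i].data_len, seq[i].delay_ms, ctx);
    }
}
```

Keeping the sequence as data means that adapting a driver to a sibling controller is mostly a matter of swapping the table.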

lcd_config.h and lcd_init.h

These files are part of my lcd_init package here. They can be reused in other projects to initialize the ESP LCD Panel API and provide supporting code for RGB mode.

config.h

This file sets up any non-LCD related configuration.

#ifndef CONFIG_H
#define CONFIG_H

#ifdef TTGO_T1
#define PIN_NUM_BUTTON_A 35
#define PIN_NUM_BUTTON_B 0
#include <button.hpp>
using namespace arduino;
#endif // TTGO_T1

#ifdef ESP_WROVER_KIT
#include <esp_lcd_panel_ili9341.h>
#endif // ESP_WROVER_KIT

#ifdef ESP_DISPLAY_S3
#define I2C_PIN_NUM_SDA 38
#define I2C_PIN_NUM_SCL 39
#define LCD_TOUCH ft6236<LCD_HRES, LCD_VRES>
#define LCD_ROTATION 1
#define EXTRA_INIT \
    touch.initialize(); \
    touch.rotation(LCD_ROTATION);
#define LCD_TOUCH_IMPL \
    if (touch.update()) { \
        if (touch.xy(&out_locations[0].x, &out_locations[0].y)) { \
            if (*in_out_locations_size > 1) { \
                *in_out_locations_size = 1; \
                if (touch.xy2(&out_locations[1].x, &out_locations[1].y)) { \
                    *in_out_locations_size = 2; \
                } \
            } else { \
                *in_out_locations_size = 1; \
            } \
        } else { \
            *in_out_locations_size = 0; \
        } \
    } else { \
        *in_out_locations_size = 0; \
    }
#include <esp_lcd_panel_ili9488.h>
#include <ft6236.hpp>
using namespace arduino;
#endif // ESP_DISPLAY_S3

#ifdef ESP_DISPLAY_4INCH
#define I2C_PIN_NUM_SDA 17
#define I2C_PIN_NUM_SCL 18
#define LCD_TOUCH_PIN_NUM_RST 38
#define LCD_TOUCH gt911<LCD_TOUCH_PIN_NUM_RST>
#define LCD_TOUCH_IMPL \
    touch.update(); \
    size_t touches = touch.locations_size(); \
    if (touches) { \
        if (touches > *in_out_locations_size) { \
            touches = *in_out_locations_size; \
        } \
        decltype(touch)::point pt[5]; \
        touch.locations(pt, &touches); \
        for (uint8_t i = 0; i < touches; i++) { \
            out_locations[i].x = pt[i].x; \
            out_locations[i].y = pt[i].y; \
        } \
    } \
    *in_out_locations_size = touches;
#define EXTRA_INIT touch.initialize();
#include <gt911.hpp>
using namespace arduino;
#endif // ESP_DISPLAY_4INCH

#ifdef M5STACK_CORE2
#define LCD_TOUCH ft6336<LCD_HRES, LCD_VRES, -1>
#define LCD_TOUCH_WIRE Wire1
#define EXTRA_DECLS m5core2_power power;
#define EXTRA_INIT power.initialize();
#include <esp_lcd_panel_ili9342.h>
#include <m5core2_power.hpp>
#include <ft6336.hpp>
using namespace arduino;
#endif // M5STACK_CORE2

#ifdef M5STACK_FIRE
#define PIN_NUM_BUTTON_A 39
#define PIN_NUM_BUTTON_B 38
#define PIN_NUM_BUTTON_C 37
#include <esp_lcd_panel_ili9342.h>
#include <button.hpp>
using namespace arduino;
#endif // M5STACK_FIRE

#ifdef T_DISPLAY_S3
#define PIN_NUM_BUTTON_A 0
#define PIN_NUM_BUTTON_B 14
#define PIN_NUM_POWER 15
#define EXTRA_INIT \
    pinMode(PIN_NUM_POWER, OUTPUT); \
    digitalWrite(PIN_NUM_POWER, HIGH);
#include <button.hpp>
using namespace arduino;
#endif // T_DISPLAY_S3

#endif // CONFIG_H

This file sets up each device. It includes the device-specific headers, sets up the touch handler to return any touch location(s), manages any power options, and so on.

History

- 3rd March, 2023 - Initial submission

- 20th March, 2023 - Refactor, support more devices and RGB mode